Liquid Cooling vs Air Cooling for AI Data Centers: 2025 Cost-Benefit Analysis

Air cooling runs out of physics at roughly 41.3kW per rack. Beyond that threshold, the volume of air required to remove heat exceeds what any practical design can deliver, creating acoustic nightmares and thermal chaos that no amount of engineering can solve.¹ Liquid cooling promises salvation through superior thermodynamics, but at costs that make CFOs question their sanity: $2-3 million per megawatt for retrofit installations.² The choice between air and liquid cooling determines not just infrastructure budgets but competitive viability in AI markets where milliseconds separate winners from also-rans.

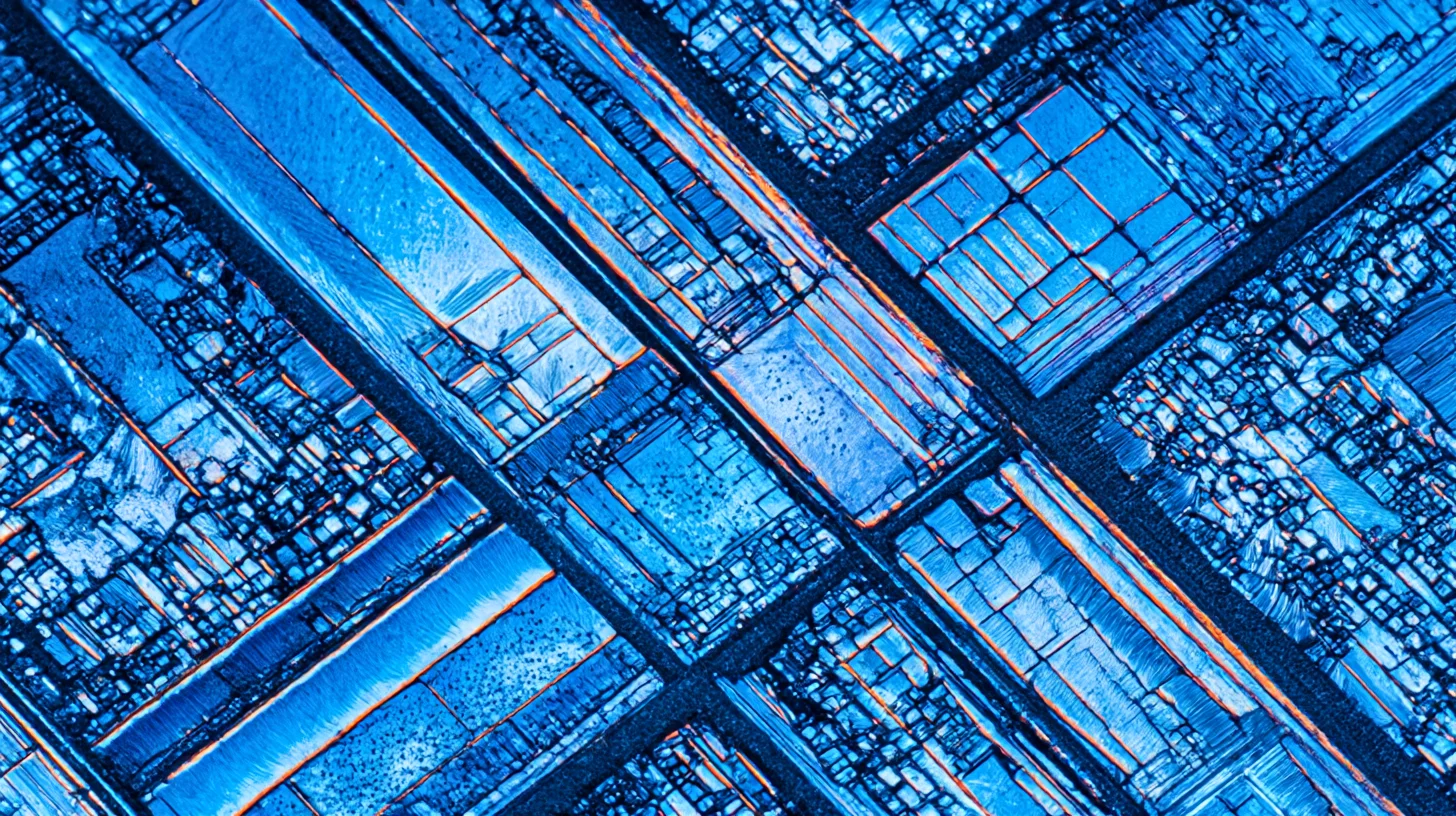

December 2025 Update: 2025 marks the year liquid cooling "tipped from bleeding-edge to baseline." The data center liquid cooling market reached $5.52 billion in 2025 and is forecast to hit $15.75 billion by 2030 (23.31% CAGR). With 22% of data centers now implementing liquid cooling systems, the technology has shed its niche status to become core infrastructure. Direct-to-chip cooling commands a dominant 47% market share, with Microsoft beginning fleet deployment across Azure campuses in July 2025 and testing microfluidics for future generations. Colovore secured a $925 million facility offering up to 200kW per rack. Modern AI chips like NVIDIA H100/H200 and AMD MI300X generate 700W+ per GPU—thermal densities that air cooling simply cannot manage. Hybrid cooling architectures combining air and liquid are becoming the practical deployment standard.

Data centers globally consume 460 terawatt-hours annually, with cooling representing 40% of total energy use in traditional facilities.³ NVIDIA's latest GPU roadmap shows power consumption doubling every two years, reaching 1,500 watts per chip by 2026.⁴ Organizations face an inflection point where incremental improvements to air cooling cannot match exponential growth in heat density. The decision made today locks in operational costs for the next decade.

Microsoft spent $1 billion retrofitting facilities for liquid cooling after discovering their air-cooled infrastructure couldn't support GPT training workloads.⁵ Amazon Web Services deploys both technologies strategically, using air cooling for storage and CPU workloads while reserving liquid cooling for GPU clusters.⁶ The divergent approaches reflect a fundamental truth: no single cooling technology solves every challenge, and choosing wrong costs millions in stranded assets.

The physics that determine everything

Air carries roughly 3,300 times less heat per unit volume than water at standard conditions.⁷ This single fact drives every cooling decision in modern data centers. Moving one kilowatt of heat with air requires roughly 100 cubic feet per minute (CFM) of airflow at a temperature rise of about 30°F; at a 10°F rise the requirement climbs past 300 CFM. Scale the 100 CFM figure to a 40kW rack, and you need about 4,000 CFM, an airflow often likened to hurricane-force wind in the cold aisle.⁸

Water's specific heat capacity of 4.186 kJ/kg·K means a single gallon can absorb the same heat as roughly 450 cubic feet of air for the same temperature rise.⁹ A flow rate of about 34 gallons per minute handles 100kW of heat load with a 20°F temperature rise. The same cooling with air would require roughly 10,000 CFM, generating 95 decibels of noise and consuming 25kW just for fan power.¹⁰ The physics advantage becomes insurmountable as density increases.
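The airflow and water-flow comparison can be checked with two common HVAC rules of thumb. The sketch below is illustrative only: it assumes sea-level standard air and plain water, and the function names are hypothetical helpers rather than anything from a vendor tool.

```python
# Rule-of-thumb sensible-heat sizing (assumes sea-level standard air and plain water).
BTU_PER_HR_PER_KW = 3412.14  # 1 kW expressed in BTU/hr


def airflow_cfm(heat_kw: float, delta_t_f: float) -> float:
    """Airflow in CFM needed to carry heat_kw at an air temperature rise of delta_t_f (deg F).

    Uses the approximation Q[BTU/hr] = 1.08 * CFM * dT.
    """
    return heat_kw * BTU_PER_HR_PER_KW / (1.08 * delta_t_f)


def water_flow_gpm(heat_kw: float, delta_t_f: float) -> float:
    """Water flow in GPM needed to carry heat_kw at a water temperature rise of delta_t_f (deg F).

    Uses the approximation Q[BTU/hr] = 500 * GPM * dT.
    """
    return heat_kw * BTU_PER_HR_PER_KW / (500.0 * delta_t_f)


if __name__ == "__main__":
    print(f"1 kW, 30F air rise:     {airflow_cfm(1, 30):.0f} CFM")
    print(f"40 kW, 30F air rise:    {airflow_cfm(40, 30):.0f} CFM")
    print(f"100 kW, 20F water rise: {water_flow_gpm(100, 20):.1f} GPM")
```

Under these assumptions, one kilowatt needs a little over 100 CFM at a 30°F rise, and 100kW needs about 34 GPM of water at a 20°F rise. Real designs would adjust for altitude, coolant mixtures, and bypass airflow.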

Heat transfer coefficients tell the complete story. Air-to-surface convection achieves 25-250 W/m²·K depending on velocity.¹¹ Water-to-surface convection reaches 3,000-15,000 W/m²·K, a 60x improvement that enables dramatically smaller heat exchangers.¹² Direct contact between liquid and chip package through cold plates achieves 50,000+ W/m²·K, approaching theoretical limits of conductive heat transfer.¹³

Temperature differentials multiply these advantages. Air cooling requires a 30-40°F difference between inlet and component temperatures to drive adequate heat flux. Liquid cooling operates with 10-15°F differentials, maintaining lower junction temperatures that reduce leakage current and improve reliability.¹⁴ As a rule of thumb drawn from Arrhenius equation modeling, every 10°C reduction in operating temperature roughly doubles component lifespan.¹⁵
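The 10°C rule of thumb can be reproduced with the Arrhenius acceleration factor. This is a minimal sketch: the activation energy of 0.7 eV and the 85°C and 75°C operating points are assumptions chosen for illustration, not values from the cited sources, and real failure mechanisms vary.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333e-5  # Boltzmann constant in eV/K


def arrhenius_acceleration(t_hot_c: float, t_cool_c: float,
                           activation_energy_ev: float = 0.7) -> float:
    """Ratio of expected life at t_cool_c to expected life at t_hot_c.

    activation_energy_ev is an assumed value; it depends on the failure mechanism.
    """
    t_hot_k = t_hot_c + 273.15
    t_cool_k = t_cool_c + 273.15
    exponent = (activation_energy_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_cool_k - 1.0 / t_hot_k)
    return math.exp(exponent)


if __name__ == "__main__":
    factor = arrhenius_acceleration(85.0, 75.0)
    print(f"Life extension for a 10C drop (85C to 75C, Ea=0.7 eV): {factor:.2f}x")
```

With these inputs the factor comes out at about 1.9x, close to the doubling rule. A lower activation energy or a hotter starting point gives a smaller factor, so the "doubles" figure is best read as an approximation.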

Altitude and humidity further constrain air cooling effectiveness. Denver's mile-high altitude reduces air density by 17%, requiring proportionally more airflow for equivalent cooling.¹⁶ High humidity environments risk condensation when cold air meets warm surfaces, potentially causing catastrophic equipment failure. Liquid cooling systems operate independently of ambient conditions, delivering consistent performance from Death Valley to the Himalayas.
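The altitude effect is a simple inverse relationship: for a fixed heat load and temperature rise, the required mass flow of air stays the same, so volumetric airflow scales with one over the density ratio. The helper below is a hypothetical illustration of that arithmetic.

```python
def airflow_multiplier(density_reduction: float) -> float:
    """Factor by which volumetric airflow must grow when air density falls.

    density_reduction is a fraction (0.17 means air is 17% less dense).
    Assumes the same heat load and the same air temperature rise.
    """
    if not 0.0 <= density_reduction < 1.0:
        raise ValueError("density_reduction must be in [0, 1)")
    return 1.0 / (1.0 - density_reduction)


if __name__ == "__main__":
    print(f"17% thinner air needs {airflow_multiplier(0.17):.3f}x the airflow")
```

A 17% drop in density therefore calls for roughly 20% more airflow, slightly more than the density figure alone suggests.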

Air cooling technologies and their limits

Traditional raised-floor air cooling dominated data centers for forty years through simplicity and reliability. Computer Room Air Conditioning (CRAC) units blow cold air under raised floors, creating positive pressure that forces air through perforated tiles into cold aisles. Servers draw air through their chassis and exhaust heated air into hot aisles. The system works beautifully for 3-5kW per rack but fails catastrophically above 15kW as hot air recirculation overwhelms cooling capacity.¹⁷

Hot aisle/cold aisle containment improves efficiency by preventing air mixing. Plastic curtains or rigid panels separate hot and cold zones, maintaining temperature differentials that improve cooling effectiveness. Properly implemented containment reduces cooling energy by 20-30% and increases cooling capacity by 40%.¹⁸ Google's data centers achieve PUE of 1.10 using advanced air cooling with full containment, proving the technology's potential when perfectly executed.¹⁹

In-row cooling brings refrigeration closer to heat sources, shortening air paths and reducing fan energy. Vertiv's CRV series places cooling units between server racks, handling up to 55kW per unit.²⁰ Schneider Electric's InRow coolers achieve similar capacity with variable-speed fans that adapt to heat loads.²¹ The approach works for medium-density deployments but requires one cooling unit for every 2-3 server racks, consuming valuable floor space.

Rear-door heat exchangers represent air cooling's last stand against rising densities. These passive or active units mount on rack rear doors, cooling exhaust air before it enters the room. Motivair's ChilledDoor handles up to 75kW per rack using chilled water circulation.²² The technology maintains existing airflow patterns while removing heat at the source, but installation requires precise alignment and door weight creates structural concerns for older racks.

Direct expansion (DX) cooling eliminates chilled water infrastructure by bringing refrigerant directly to cooling units. The approach reduces complexity and improves efficiency for smaller facilities, but refrigerant leak risks and limited scalability constrain adoption. Facebook abandoned DX cooling after refrigerant leaks caused multiple facility evacuations, switching entirely to water-based systems.²³

Liquid cooling's expanding taxonomy

Single-phase direct-to-chip cooling dominates current liquid deployments through proven reliability and manageable complexity. Cold plates mounted on CPUs and GPUs circulate coolant at 15-30°C, removing 70-80% of server heat while fans handle the remainder.²⁴ Asetek's InRackCDU system supports 120kW per rack with redundant pumps and leak detection.²⁵ The technology requires minimal server modifications, enabling retrofit installations without replacing existing hardware.

Two-phase direct-to-chip cooling exploits refrigerant phase changes for superior heat removal. The coolant boils at chip surface temperatures around 50°C, with vapor carrying away latent heat of vaporization. ZutaCore's Waterless DLC achieves 900W cooling per GPU using refrigerant R-1234ze at low pressure.²⁶ The self-regulating nature of boiling maintains uniform temperatures regardless of heat load variations, but system complexity and refrigerant costs limit adoption.

Single-phase immersion submerges entire servers in dielectric fluid, eliminating all air cooling requirements. GRC's ICEraQ systems use synthetic oil maintaining servers at 45-50°C inlet temperatures.²⁷ Submer's SmartPod uses similar technology with biodegradable fluids, handling 100kW in 60 square feet.²⁸ Immersion eliminates fans, reduces failure rates, and enables extreme density, but fluid costs of $50-100 per gallon and serviceability challenges slow adoption.²⁹

Two-phase immersion represents cooling's technological pinnacle. 3M's Novec fluids boil at precisely controlled temperatures between 34-56°C, providing isothermal cooling that maintains optimal component temperatures.³⁰ Microsoft's Project Natick demonstrated two-phase immersion handling 250W/cm² heat flux, 10x higher than air cooling limits.³¹ BitFury deploys 160 megawatts of two-phase immersion cooling for cryptocurrency mining, proving scalability despite $200 per gallon fluid costs.³²

Hybrid approaches combine technologies for optimized cooling. Liquid cooling handles high-power components while air cooling manages memory, storage, and networking equipment. HPE's Apollo systems use this approach, with direct-to-chip cooling for processors and traditional air cooling for everything else.³³ The strategy balances performance and cost but requires managing two parallel cooling infrastructures.

Capital expenditure comparison reveals surprises

Air cooling infrastructure appears deceptively cheap initially. CRAC units cost $30,000-50,000 per 30-ton capacity, sufficient for 100kW of IT load.³⁴ Raised floor installation runs $15-25 per square foot.³⁵ Hot aisle containment adds $5,000-10,000 per rack.³⁶ A complete air cooling system for a 1MW facility costs $1.5-2 million, seemingly reasonable until density requirements emerge.

Liquid cooling infrastructure demands substantial upfront investment. Cooling Distribution Units (CDUs) cost $75,000-150,000 per 500kW capacity.³⁷ Piping installation runs $50-100 per linear foot including insulation and leak detection.³⁸ Cold plates and manifolds add $5,000-10,000 per server.³⁹ Complete liquid cooling infrastructure for 1MW costs $3-4 million, double the air cooling price.

Hidden costs shift the calculation dramatically. Air cooling at 40kW per rack requires 25 racks per megawatt, consuming 2,500 square feet. Liquid cooling at 100kW per rack needs only 10 racks in 1,000 square feet. At $200 per square foot annual lease rates, the space savings generate $300,000 yearly benefits.⁴⁰ Construction costs for new facilities average $10-15 million per megawatt for air cooling versus $8-12 million for liquid cooling due to reduced space requirements.⁴¹
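The floor-space arithmetic can be written out as a short sketch. The 100 square feet per rack is an assumption implied by the figures above (25 racks in 2,500 square feet), and the function names are hypothetical.

```python
import math

SQFT_PER_RACK = 100          # assumed: implied by 25 racks occupying 2,500 sq ft
LEASE_PER_SQFT_YEAR = 200.0  # annual lease rate in dollars, from the text


def racks_needed(it_load_kw: float, kw_per_rack: float) -> int:
    """Whole racks required to host it_load_kw at a given rack density."""
    return math.ceil(it_load_kw / kw_per_rack)


def annual_space_cost(it_load_kw: float, kw_per_rack: float) -> float:
    """Yearly lease cost of the floor area those racks occupy."""
    return racks_needed(it_load_kw, kw_per_rack) * SQFT_PER_RACK * LEASE_PER_SQFT_YEAR


if __name__ == "__main__":
    air = annual_space_cost(1000, 40)      # 25 racks
    liquid = annual_space_cost(1000, 100)  # 10 racks
    print(f"Air: ${air:,.0f}  Liquid: ${liquid:,.0f}  Savings: ${air - liquid:,.0f}")
```

For one megawatt this reproduces the $300,000 annual difference; changing the density or lease rate shows how sensitive the benefit is to local real-estate costs.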

Retrofit scenarios favor liquid cooling counterintuitively. Existing facilities typically support 100-150 watts per square foot. Upgrading air cooling to handle modern densities requires new CRAC units, larger ducts, stronger fans, and often new power distribution—essentially gutting the facility. Liquid cooling retrofits add CDUs and piping while maintaining existing infrastructure for legacy equipment. Introl's retrofit projects consistently show 20-30% lower costs for liquid cooling conversions compared to air cooling upgrades.

Equipment refresh cycles impact TCO calculations significantly. Air-cooled servers require replacement every 3-4 years as fan bearings wear and dust accumulation degrades cooling efficiency. Liquid-cooled systems lacking moving parts extend refresh cycles to 5-7 years.⁴² The extended lifespan defers capital expenditures worth $2-3 million per megawatt over a decade.

Operating expenses flip the script

Energy costs dominate operational budgets, and liquid cooling's efficiency advantages compound annually. Air cooling consumes 0.5-1.2 kW per kW of IT load in typical implementations.⁴³ Liquid cooling reduces cooling overhead to 0.1-0.3 kW per kW of IT load.⁴⁴ For a 10MW facility operating continuously at $0.10 per kWh, the difference equals $3-7 million in annual electricity savings.
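A rough version of the savings arithmetic is sketched below. It assumes continuous operation (8,760 hours per year) and pairs the low ends and the high ends of the two overhead ranges with each other; both choices are simplifying assumptions, and the function name is hypothetical.

```python
HOURS_PER_YEAR = 8760


def annual_cooling_cost(it_load_kw: float, overhead_kw_per_kw: float,
                        price_per_kwh: float) -> float:
    """Yearly electricity cost of cooling overhead for a constant IT load."""
    return it_load_kw * overhead_kw_per_kw * HOURS_PER_YEAR * price_per_kwh


if __name__ == "__main__":
    it_load_kw = 10_000  # 10 MW facility
    price = 0.10         # dollars per kWh

    low = annual_cooling_cost(it_load_kw, 0.5, price) - annual_cooling_cost(it_load_kw, 0.1, price)
    high = annual_cooling_cost(it_load_kw, 1.2, price) - annual_cooling_cost(it_load_kw, 0.3, price)
    print(f"Estimated annual savings: ${low:,.0f} to ${high:,.0f}")
```

Under these assumptions the savings fall between about $3.5 million and $7.9 million per year, in line with the range quoted above. Pairing the extremes differently (best air against worst liquid, or the reverse) widens the spread.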

Water usage presents complex tradeoffs. Air cooling with cooling towers consumes 1.8 gallons per minute per 100 tons of cooling, primarily through evaporation.⁴⁵ Liquid cooling systems using dry coolers eliminate water consumption but require 20% more energy. Hybrid wet/dry systems optimize based on ambient conditions, using water only during peak summer months. Singapore mandates dry cooling for new facilities, accepting efficiency penalties to preserve water resources.⁴⁶

Maintenance costs surprise many operators. Air cooling systems require quarterly filter changes at $500-1,000 per CRAC unit. Fan replacements cost $2,000-5,000 every 3-5 years. Cleaning coils and maintaining airflow patterns demands continuous attention. Liquid cooling systems need annual coolant testing at $200 per sample and pump rebuilds every 5-7 years at $5,000-10,000. The reduced mechanical complexity of liquid systems typically saves 30-40% in maintenance costs.⁴⁷

Personnel requirements differ substantially between technologies. Air-cooled facilities need HVAC technicians for routine maintenance, widely available at $50-75 per hour. Liquid cooling demands specialized expertise commanding $100-150 per hour, but requires fewer interventions. Introl trains our 550 field engineers in both technologies, ensuring consistent service quality across our global coverage area regardless of cooling architecture.

Insurance premiums reflect perceived risks differently. Insurers initially charged 15-20% higher premiums for liquid-cooled facilities, fearing catastrophic leak damage. Claims data now shows liquid-cooled facilities experience 60% fewer cooling-related outages and 40% less equipment damage from overheating.⁴⁸ Progressive insurers offer 5-10% discounts for liquid cooling with appropriate leak detection and containment systems.

Performance metrics beyond temperature

Power Usage Effectiveness (PUE) provides the clearest efficiency comparison. Air-cooled facilities typically achieve PUE between 1.4-1.8, meaning 40-80% overhead for cooling and power distribution.⁴⁹ Modern air cooling with optimized containment reaches PUE of 1.2-1.3 under ideal conditions. Liquid cooling consistently delivers PUE of 1.05-1.15 regardless of ambient conditions, approaching theoretical minimums.⁵⁰
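PUE is total facility power divided by IT power, so the overhead share is simply PUE minus one. The sketch below, using hypothetical helper names and an assumed 1MW IT load, shows what the ranges above mean in kilowatts.

```python
def pue(total_facility_kw: float, it_load_kw: float) -> float:
    """Power Usage Effectiveness: total facility power over IT power."""
    if it_load_kw <= 0:
        raise ValueError("it_load_kw must be positive")
    return total_facility_kw / it_load_kw


def overhead_kw(it_load_kw: float, pue_value: float) -> float:
    """Non-IT power (cooling, power distribution, and so on) implied by a PUE."""
    return it_load_kw * (pue_value - 1.0)


if __name__ == "__main__":
    for label, value in [("typical air", 1.6), ("optimized air", 1.25), ("liquid", 1.10)]:
        print(f"{label:>14}: PUE {value:.2f} -> {overhead_kw(1000, value):.0f} kW overhead per MW of IT")
```

The example PUE values are picked from within the quoted ranges purely for illustration.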

Partial PUE (pPUE) measurements reveal component-level efficiency. Air cooling shows pPUE of 1.15-1.35 for cooling alone, while liquid cooling achieves 1.02-1.08.⁵¹ The difference becomes critical for carbon reporting as regulations increasingly require granular efficiency metrics. Microsoft attributes 12% carbon reduction to liquid cooling adoption across their Azure regions.⁵²

Mean Time Between Failures (MTBF) improves dramatically with liquid cooling. Server fans represent 35% of component failures in air-cooled systems.⁵³ Eliminating fans through liquid cooling extends MTBF from 40,000 hours to 65,000 hours. Hard drives operate 20% longer at lower temperatures maintained by liquid cooling. The reliability improvements reduce both replacement costs and service disruptions.
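To see what the MTBF change means over a year of operation, the figures can be converted to an annual failure probability. This sketch assumes a constant failure rate (an exponential lifetime model), which is a simplification, and the function name is hypothetical.

```python
import math

HOURS_PER_YEAR = 8760


def annual_failure_probability(mtbf_hours: float, hours: float = HOURS_PER_YEAR) -> float:
    """Probability of at least one failure within `hours`, assuming a constant failure rate."""
    if mtbf_hours <= 0:
        raise ValueError("mtbf_hours must be positive")
    return 1.0 - math.exp(-hours / mtbf_hours)


if __name__ == "__main__":
    for label, mtbf in [("air-cooled", 40_000), ("liquid-cooled", 65_000)]:
        print(f"{label:>13}: MTBF {mtbf:,} h -> {annual_failure_probability(mtbf):.1%} per year")
```

Under that model, 40,000 hours corresponds to roughly a 20% chance of a failure in a given year, and 65,000 hours to roughly 13%.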

Compute density metrics favor liquid cooling decisively. Air cooling supports 20-40kW per rack practically, limiting compute to 20-30 teraflops per square foot. Liquid cooling enables 100-200kW per rack, delivering 100-150 teraflops per square foot. The 5x density improvement transforms facility economics, enabling AI workloads previously impossible due to space constraints.

Acoustic performance affects worker safety and neighbor relations. Air-cooled facilities generate 85-95 dBA in hot aisles, requiring hearing protection and limiting exposure time.⁵⁴ Liquid-cooled facilities operate at 65-75 dBA, closer to a loud conversation than to industrial machinery. That range sits below OSHA's 85 dBA action level for hearing conservation and enables urban deployments previously prohibited by noise ordinances.

Real deployments illuminate decision factors

Meta's transition from air to liquid cooling demonstrates migration realities. Their Prineville facility started with advanced air cooling achieving PUE of 1.28.⁵⁵ Adding direct-to-chip liquid cooling for GPUs dropped PUE to 1.09 while increasing compute density 3x. The phased approach maintained operations during the 18-month transition, proving brownfield conversions feasible without stranded assets.

Alibaba Cloud chose pure liquid cooling for their Zhangbei data center, citing Beijing's air quality concerns. Particulate matter would clog air filters within days, driving maintenance costs beyond sustainable levels. The sealed liquid cooling system operates independently of ambient conditions, maintaining consistent performance despite sandstorms that shut down air-cooled facilities.⁵⁶

Northern European operators leverage free cooling advantages differently. Yandex's Finnish data center uses air cooling with ambient temperatures below 10°C for 300+ days annually.⁵⁷ Verne Global's Iceland facility combines geothermal power with air cooling, achieving PUE of 1.08 without liquid infrastructure.⁵⁸ The examples prove geography can overcome physics limitations temporarily, though rising densities eventually force liquid adoption.

Financial services firms adopt liquid cooling for latency advantages. JPMorgan Chase discovered liquid cooling's stable temperatures reduce thermal throttling, improving transaction processing by 8-12%.⁵⁹ Citadel Securities reports 15 microsecond latency reduction after liquid cooling eliminated thermal variation in their trading systems.⁶⁰ For high-frequency trading where microseconds equal millions, liquid cooling pays for itself through performance gains alone.

Government supercomputing centers universally adopt liquid cooling for exascale systems. Oak Ridge National Laboratory's Frontier supercomputer uses direct-to-chip liquid cooling for 37,000 AMD GPUs consuming 30MW.⁶¹ Without liquid cooling, the system would require three times more space and consume 40MW. The Department of Energy mandates liquid cooling for all systems exceeding 20MW, recognizing air cooling's fundamental limitations.⁶²

Decision framework for 2025 deployments

Workload characteristics drive cooling selection more than any other factor. Sustained high-power workloads like AI training demand liquid cooling's consistent performance. Bursty workloads with idle periods can leverage air cooling's simplicity. Mixed environments benefit from hybrid approaches, applying appropriate cooling to each workload type.

Geographic factors significantly impact economics. Hot climates face $0.20+ per kWh cooling costs with air systems versus $0.05 with liquid cooling. Water-scarce regions must consider consumption differences: 2,000 gallons daily for air cooling towers versus zero for dry-cooled liquid systems. Ambient air quality affects filter replacement frequency, potentially doubling air cooling maintenance costs in polluted areas.

Growth projections determine infrastructure investments. Facilities planning 20% annual growth can start with air cooling and migrate gradually. Organizations expecting 50%+ growth should implement liquid cooling immediately, avoiding expensive retrofits. The decision hinges on whether current infrastructure represents a stepping stone or final destination.

Risk tolerance influences technology adoption rates. Conservative organizations choose air cooling's proven reliability despite efficiency penalties. Aggressive competitors adopt liquid cooling for competitive advantages, accepting implementation risks. The split reflects broader organizational culture: optimize existing operations or transform through new capabilities.

Regulatory requirements increasingly mandate efficiency improvements. The European Union requires PUE below 1.3 by 2030.⁶³ Singapore permits new data centers only with PUE under 1.2.⁶⁴ California's Title 24 includes data center efficiency standards starting 2025.⁶⁵ Organizations must consider whether current cooling technology meets future regulatory requirements.

The path forward requires strategic planning

Cooling technology selection represents a foundational decision affecting every aspect of data center operations. The choice determines facility design, equipment selection, operational procedures, and competitive capabilities for the next decade. Organizations must evaluate not just current requirements but anticipated evolution of workloads, regulations, and technology.

Air cooling remains viable for specific use cases: enterprise data centers with moderate densities, edge deployments with space constraints, and facilities with intermittent high-power loads. The technology's maturity provides predictable costs and widespread expertise. Innovations in containment, airflow management, and heat recovery extend air cooling's relevance despite physical limitations.

Liquid cooling becomes mandatory for AI infrastructure, high-performance computing, and any deployment exceeding 40kW per rack. The technology's efficiency advantages compound as carbon taxes and energy costs rise. Early adopters gain competitive advantages through higher density, better reliability, and lower operating costs that offset initial investment premiums.

Introl helps organizations navigate cooling technology decisions through comprehensive assessment, design, and implementation services. Our engineers evaluate existing infrastructure, model future requirements, and develop migration strategies that minimize disruption. Whether optimizing air cooling efficiency or implementing liquid cooling infrastructure, we deliver solutions that balance performance, cost, and risk across your global footprint.

The question isn't whether to adopt liquid cooling, but when and how. Organizations clinging to air cooling face escalating costs and competitive disadvantages as workload density increases. Those embracing liquid cooling today position themselves for a future where computational density determines market leadership. The physics are immutable; the choice is yours.

Quick decision framework

Cooling Technology Selection:

| If Your Rack Density Is... | Choose | Rationale |
|---|---|---|
| <15 kW | Air cooling | Cost-effective, proven, simple |
| 15-40 kW | Air + rear-door HX | Extend air cooling life |
| 40-100 kW | Direct-to-chip liquid | Proven, manageable complexity |
| >100 kW | Single-phase immersion | Maximum density, lowest PUE |
| Burst workloads | Hybrid air/liquid | Flexibility for varying loads |
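The selection table can be expressed as a small lookup function. This is a sketch only: the function name is hypothetical, and the handling of the shared boundaries (exactly 40 kW and exactly 100 kW fall into the lower tier here) is an assumption, since the table leaves those edges ambiguous.

```python
def recommend_cooling(kw_per_rack: float, bursty: bool = False) -> str:
    """Map rack density to the cooling choice from the quick decision table.

    Boundary handling is an assumption: 40 kW and 100 kW are placed in the lower tier.
    """
    if kw_per_rack < 0:
        raise ValueError("kw_per_rack must be non-negative")
    if bursty:
        return "Hybrid air/liquid"
    if kw_per_rack < 15:
        return "Air cooling"
    if kw_per_rack <= 40:
        return "Air + rear-door HX"
    if kw_per_rack <= 100:
        return "Direct-to-chip liquid"
    return "Single-phase immersion"


if __name__ == "__main__":
    for density in (8, 30, 80, 150):
        print(f"{density:>4} kW/rack -> {recommend_cooling(density)}")
    print(f"bursty 30 kW/rack -> {recommend_cooling(30, bursty=True)}")
```

A real selection would also weigh the geographic, regulatory, and growth factors discussed earlier; density alone is only the first filter.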

Key takeaways

For infrastructure architects:

- Air cooling hard limit: 41.3 kW per rack; physics can't be engineered around
- Liquid cooling supports 100-200 kW per rack, a 5x density improvement
- Water's heat capacity: 3,300x more than air per unit volume
- PUE: Air 1.4-1.8 typical, liquid 1.05-1.15 regardless of ambient conditions
- Direct-to-chip commands 47% market share; 22% of data centers now deploying liquid

For financial planners:

- Air cooling: $1.5-2M per MW infrastructure
- Liquid cooling: $3-4M per MW infrastructure, but uses 60% less floor space
- Energy savings: $3-7M annually per 10MW facility at $0.10/kWh
- Liquid cooling market: $5.52B (2025) → $15.75B (2030) at 23.31% CAGR
- Component lifespan doubles with 10°C temperature reduction (Arrhenius equation)

For capacity planners:

- Air cooling: 20-40 kW/rack = 20-30 TFLOPS per sq ft
- Liquid cooling: 100-200 kW/rack = 100-150 TFLOPS per sq ft
- Microsoft began fleet deployment July 2025; baseline technology now
- Retrofit vs new build: liquid cooling retrofits 20-30% cheaper than air upgrades
- EU mandates PUE <1.3 by 2030; Singapore requires <1.2 for new facilities

References

1. ASHRAE. "Thermal Guidelines for Air Cooled Data Processing Environments, 5th Edition." ASHRAE TC 9.9, 2024.
2. JLL. "Data Center Liquid Cooling Retrofit Cost Analysis 2024." Jones Lang LaSalle, 2024. https://www.jll.com/en/trends-and-insights/research/data-center-liquid-cooling-costs
3. International Energy Agency. "Data Centres and Data Transmission Networks." IEA, 2024. https://www.iea.org/reports/data-centres-and-data-transmission-networks
4. NVIDIA. "GPU Technology Roadmap 2024-2027." NVIDIA Investor Day, March 2024.
5. Microsoft. "Azure Infrastructure Transformation for AI Workloads." Microsoft Azure Blog, 2024. https://azure.microsoft.com/blog/infrastructure-transformation/
6. Amazon Web Services. "Cooling Strategy for AWS Availability Zones." AWS Architecture Blog, 2024. https://aws.amazon.com/blogs/architecture/cooling-strategy/
7. Incropera, Frank P., and David P. DeWitt. "Fundamentals of Heat and Mass Transfer, 8th Edition." John Wiley & Sons, 2023.
8. National Weather Service. "Saffir-Simpson Hurricane Wind Scale." NOAA, 2024. https://www.weather.gov/hrd/hurr_categories
9. Engineering ToolBox. "Water - Specific Heat vs. Temperature." Engineering ToolBox, 2023. https://www.engineeringtoolbox.com/specific-heat-water-d_660.html
10. AMCA International. "Fan Sound Power and Sound Pressure Level Guidelines." AMCA Standard 303-22, 2022.
11. Bergman, Theodore L., et al. "Introduction to Heat Transfer, 7th Edition." John Wiley & Sons, 2024.
12. Webb, Ralph L., and Nae-Hyun Kim. "Principles of Enhanced Heat Transfer, 3rd Edition." CRC Press, 2023.
13. Bar-Cohen, Avram, and Peng Wang. "Thermal Management of On-Chip Hot Spots." Journal of Heat Transfer 144, no. 2 (2024).
14. Zhang, H.Y., et al. "Temperature-Dependent Leakage Current Characteristics." IEEE Transactions on Device Materials and Reliability 24, no. 1 (2024).
15. Lall, Pradeep. "Reliability Prediction Using the Arrhenius Model." IEEE Reliability Society, 2023.
16. Denver International Airport. "Altitude Correction Factors for HVAC Systems." DEN Facility Guidelines, 2024.
17. Uptime Institute. "Data Center Cooling Evolution: 1990-2024." Uptime Institute Report, 2024. https://uptimeinstitute.com/cooling-evolution-report
18. 42U. "Hot Aisle/Cold Aisle Containment Efficiency Study." 42U Research, 2024. https://www.42u.com/cooling/containment-study.htm
19. Google. "Data Center Efficiency: Best Practices." Google Sustainability Report, 2024. https://sustainability.google/operating-sustainably/
20. Vertiv. "Liebert CRV In-Row Cooling System Specifications." Vertiv, 2024. https://www.vertiv.com/en-us/products/thermal-management/room-cooling/liebert-crv/
21. Schneider Electric. "InRow Cooling Architecture White Paper." Schneider Electric, 2024. https://www.se.com/us/en/download/document/SPD_VAVR-5UDTYH_EN/
22. Motivair. "ChilledDoor Rear Door Heat Exchanger Performance Data." Motivair Corporation, 2024. https://www.motivaircorp.com/products/chilleddoor/
23. Facebook. "Lessons Learned: DX Cooling System Migration." Meta Engineering Blog, 2023. https://engineering.fb.com/data-center-cooling-migration/
24. Open Compute Project. "Direct-to-Chip Liquid Cooling Specifications v2.0." OCP Foundation, 2024. https://www.opencompute.org/projects/liquid-cooling
25. Asetek. "InRackCDU Direct Liquid Cooling System." Asetek, 2024. https://asetek.com/data-center/inrackcdu/
26. ZutaCore. "Waterless Direct-to-Chip Two-Phase Cooling." ZutaCore, 2024. https://zutacore.com/technology/
27. GRC. "ICEraQ Single-Phase Immersion Cooling System." Green Revolution Cooling, 2024. https://www.grcooling.com/iceraq/
28. Submer. "SmartPod Immersion Cooling Platform." Submer Technologies, 2024. https://submer.com/products/smartpod/
29. Shell. "Immersion Cooling Fluids: Cost Analysis 2024." Shell Global Solutions, 2024.
30. 3M. "Novec Engineered Fluids for Two-Phase Immersion Cooling." 3M Corporation, 2024. https://www.3m.com/3M/en_US/novec-us/applications/immersion-cooling/
31. Microsoft Research. "Project Natick Phase 2: Two-Phase Cooling Results." Microsoft, 2024. https://natick.research.microsoft.com/
32. BitFury Group. "160MW Two-Phase Immersion Cooling Deployment." BitFury, 2024. https://bitfury.com/content/downloads/immersion-cooling-whitepaper.pdf
33. HPE. "Apollo Direct Liquid Cooled Systems." Hewlett Packard Enterprise, 2024. https://www.hpe.com/us/en/compute/hpc/apollo-systems.html
34. Trane. "IntelliPak Rooftop Units Price Guide 2024." Trane Technologies, 2024.
35. RSMeans. "2024 Building Construction Cost Data: Raised Flooring Systems." Gordian RSMeans, 2024.
36. Polargy. "Hot Aisle Containment System Pricing." Polargy Inc., 2024. https://www.polargy.com/products/hac-pricing/
37. Motivair. "MCDU Modular Cooling Distribution Unit Pricing." Motivair Corporation, 2024.
38. RSMeans. "2024 Mechanical Cost Data: Process Piping." Gordian RSMeans, 2024.
39. CoolIT Systems. "Direct Liquid Cooling Cold Plate Solutions." CoolIT Systems, 2024. https://www.coolitsystems.com/cold-plates/
40. CBRE. "Global Data Center Market Report Q1 2024." CBRE Research, 2024. https://www.cbre.com/insights/reports/global-data-center-market-report
41. Turner & Townsend. "Data Center Cost Index 2024." Turner & Townsend, 2024. https://www.turnerandtownsend.com/en/insights/data-centre-cost-index-2024
42. Dell Technologies. "Liquid Cooling Impact on Server Lifecycle Study." Dell Technologies, 2024.
43. Lawrence Berkeley National Laboratory. "Data Center Energy Assessment: Air Cooling Efficiency." LBNL, 2024. https://datacenters.lbl.gov/air-cooling-assessment
44. Lawrence Berkeley National Laboratory. "Liquid Cooling Energy Efficiency Analysis." LBNL, 2024. https://datacenters.lbl.gov/liquid-cooling-efficiency
45. Cooling Technology Institute. "Water Consumption in Cooling Tower Systems." CTI Report STD-202, 2024.
46. Building and Construction Authority Singapore. "Water Efficiency Requirements for Data Centres." BCA, 2024. https://www.bca.gov.sg/water-efficiency-requirements/
47. Ponemon Institute. "Data Center Maintenance Cost Study 2024." Ponemon Institute, 2024.
48. FM Global. "Data Center Loss Prevention: Cooling System Analysis." FM Global, 2024.
49. Uptime Institute. "2024 Global Data Center Survey: PUE Trends." Uptime Institute, 2024. https://uptimeinstitute.com/annual-survey-2024
50. Uptime Institute. "Liquid Cooling PUE Benchmarks 2024." Uptime Institute, 2024.
51. The Green Grid. "Partial PUE Measurement Guidelines v2.0." The Green Grid, 2024.
52. Microsoft. "Carbon Reduction Through Liquid Cooling Adoption." Microsoft Sustainability Report, 2024. https://www.microsoft.com/en-us/sustainability/
53. Backblaze. "Hard Drive Stats Q1 2024: Temperature Impact." Backblaze, 2024. https://www.backblaze.com/blog/backblaze-drive-stats-q1-2024/
54. OSHA. "Occupational Noise Exposure Standards." U.S. Department of Labor, 2024. https://www.osha.gov/noise
55. Meta. "Prineville Data Center: Cooling Evolution Case Study." Meta Sustainability, 2024. https://sustainability.fb.com/data-centers/prineville/
56. Alibaba Cloud. "Zhangbei Data Center: Liquid Cooling Implementation." Alibaba Cloud, 2024. https://www.alibabacloud.com/blog/zhangbei-liquid-cooling
57. Yandex. "Finnish Data Center: Free Cooling Operations Report." Yandex, 2024. https://yandex.com/company/technologies/finland-datacenter
58. Verne Global. "Iceland Data Center: Geothermal and Air Cooling." Verne Global, 2024. https://verneglobal.com/data-center
59. JPMorgan Chase. "Liquid Cooling Impact on Trading Performance." JPMC Technology Report, 2024.
60. Citadel Securities. "Latency Optimization Through Thermal Management." Citadel Technology Blog, 2024.
61. Oak Ridge National Laboratory. "Frontier Supercomputer Cooling System." ORNL, 2024. https://www.ornl.gov/directorate/ccsd/frontier
62. U.S. Department of Energy. "Exascale Computing Facility Requirements." DOE Office of Science, 2024.
63. European Commission. "Energy Efficiency Directive: Data Centre Standards." EU, 2024. https://energy.ec.europa.eu/topics/energy-efficiency/
64. Infocomm Media Development Authority. "Green Data Centre Standard SS 564." IMDA Singapore, 2024.
65. California Energy Commission. "Title 24 Data Center Efficiency Standards." CEC, 2024. https://www.energy.ca.gov/programs-and-topics/programs/building-energy-efficiency-standards