NVIDIA CEO Jensen Huang dropped a bombshell at GTC 2025, sending infrastructure teams scrambling for their calculators: the Vera Rubin platform will push data center racks to 600 kilowatts by 2027.¹ The announcement marks a fundamental shift in how data centers operate, forcing a complete rethinking of power delivery, cooling systems, and physical infrastructure that has remained essentially unchanged for decades.

The Vera Rubin platform represents NVIDIA's most ambitious leap yet. This multi-component system combines the custom Vera CPU, the next-generation Rubin GPU, and the specialized Rubin CPX (Context Processing eXtension) accelerator, designed specifically for million-token AI workloads.² A new GPU generation usually brings incremental improvement. This one does not: according to NVIDIA, the Vera Rubin NVL144 CPX variant delivers 7.5x the AI performance of current Blackwell GB300 NVL72 systems, while fundamentally changing how GPUs are packaged, cooled, and deployed.³

"We're the first technology company in history that announced four generations of something," Huang explained to Data Center Dynamics, laying out NVIDIA's roadmap through 2028.⁴ The transparency serves a critical purpose: giving infrastructure providers, data center operators, and companies like Introl the lead time needed to prepare for what amounts to a complete reimagining of AI infrastructure.

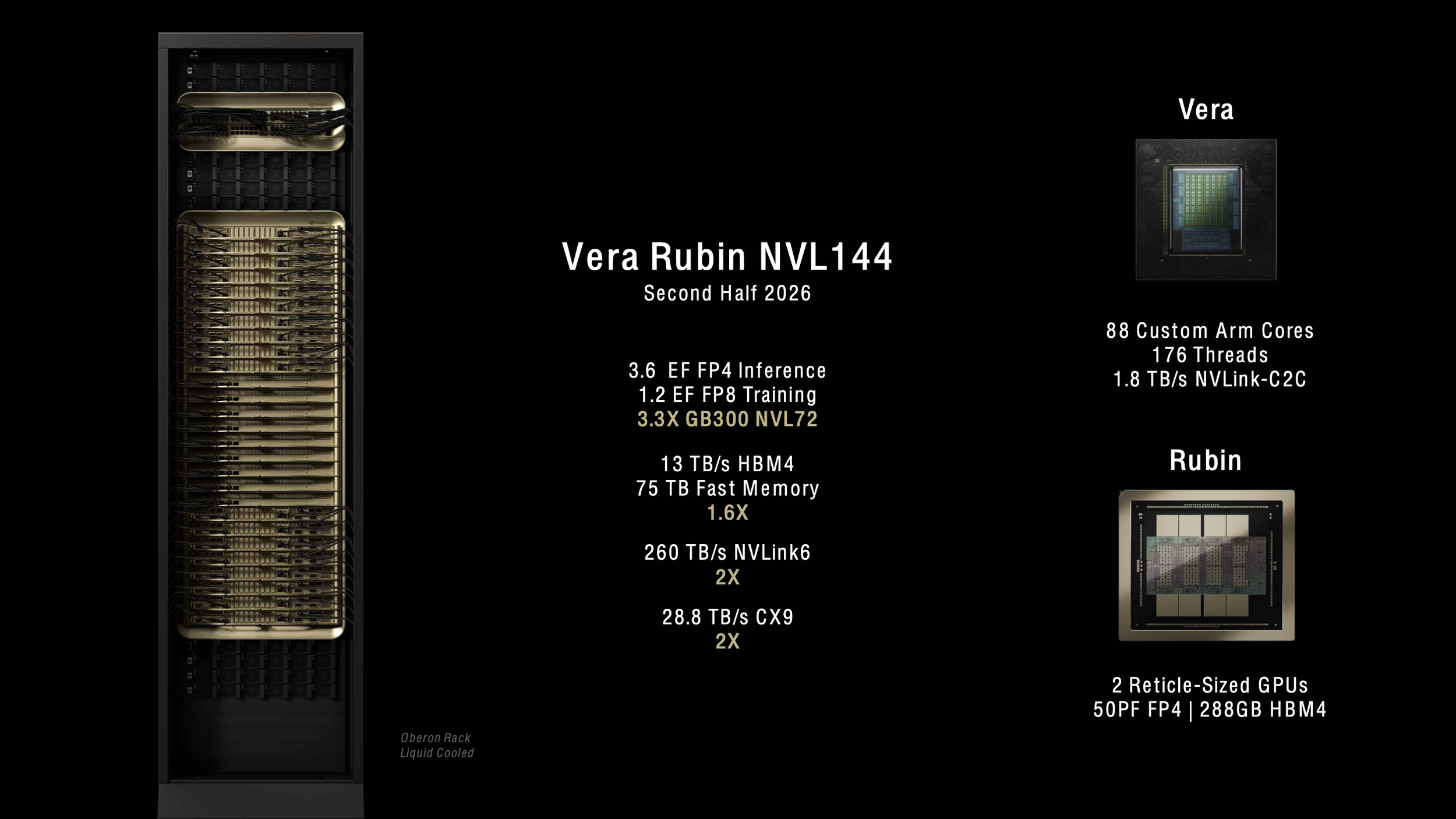

[caption id="" align="alignnone" width="2522"] NVIDIA Vera Rubin NVL144 platform specifications showing 3.6 exaflops of FP4 inference performance and 3.3x improvement over GB300 NVL72, arriving second half 2026. [/caption]

## The architecture revolution starts with custom silicon

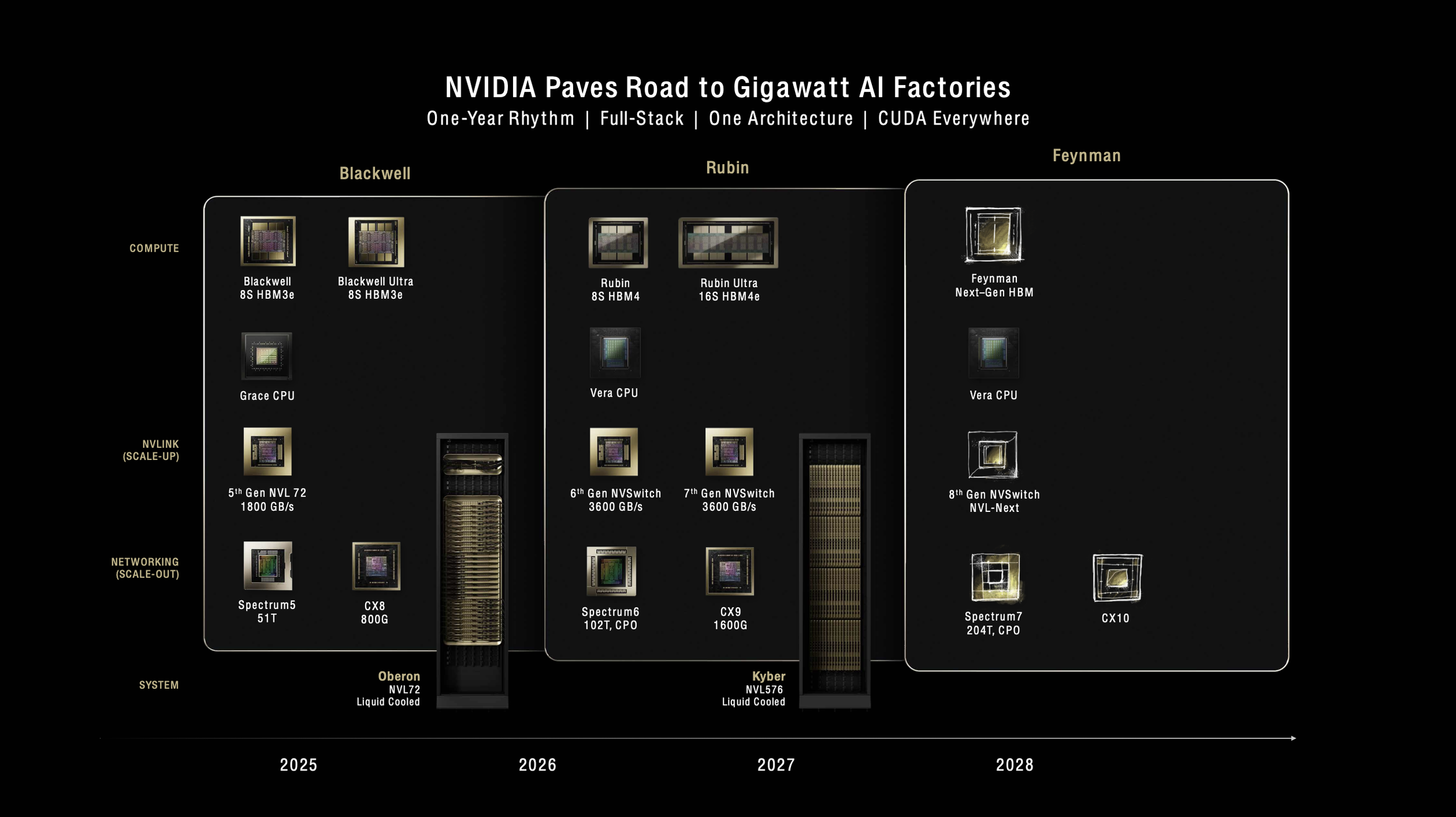

[caption id="" align="alignnone" width="2520"] NVIDIA's complete roadmap from Blackwell through Feynman, showing the evolution from Oberon to Kyber rack architectures supporting up to 600kW power consumption. [/caption]

The Vera CPU marks NVIDIA's departure from the off-the-shelf Arm Neoverse cores used in Grace. It features 88 custom Arm cores with simultaneous multithreading, for a total of 176 threads.⁵ NVIDIA calls the custom cores "Olympus" and says the design delivers twice the performance of the Grace CPU used in current Blackwell systems.⁶ Each Vera CPU connects to Rubin GPUs through a 1.8 TB/s NVLink-C2C interface. That is double the 900 GB/s link used in Grace-based superchips.⁷

The standard Rubin GPU carries 288GB of HBM4 memory per package. That matches the capacity of the Blackwell Ultra B300, but memory bandwidth rises from 8 TB/s to 13 TB/s.⁸ Each Rubin package contains two reticle-limited GPU dies, and NVIDIA has changed how it counts them: what Blackwell called one GPU (two dies), Rubin calls two GPUs.⁹ The change reflects the growing complexity of multi-die architectures and makes the die count in each system explicit. It also means a system's headline GPU count doubles without any change in the number of physical packages.
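
To make the counting change concrete, here is a small Python sketch that converts between physical packages and die-based "GPU" counts, then computes the per-package bandwidth uplift from the figures above. The helper names are hypothetical illustrations, not an NVIDIA tool or API.

```python
# Hypothetical helpers illustrating NVIDIA's die-based "GPU" counting.
# Inputs come from the text: two reticle-limited dies per Rubin package, and
# 8 TB/s (Blackwell Ultra B300) vs. 13 TB/s (Rubin) of HBM bandwidth per package.

DIES_PER_RUBIN_PACKAGE = 2


def gpus_as_named(packages: int, dies_per_package: int = DIES_PER_RUBIN_PACKAGE) -> int:
    """GPU count under die-based naming, where every die counts as one GPU."""
    return packages * dies_per_package


def packages_from_name(named_gpus: int, dies_per_package: int = DIES_PER_RUBIN_PACKAGE) -> int:
    """Physical packages implied by a die-based name such as NVL144."""
    if named_gpus % dies_per_package:
        raise ValueError("named GPU count must be a multiple of dies per package")
    return named_gpus // dies_per_package


def bandwidth_uplift(old_tbps: float, new_tbps: float) -> float:
    """Ratio of new to old per-package memory bandwidth."""
    return new_tbps / old_tbps


print(gpus_as_named(72))          # 144, the "144" in NVL144
print(packages_from_name(144))    # 72 physical packages
print(bandwidth_uplift(8, 13))    # 1.625, i.e. 62.5% more bandwidth per package
```

The same 72 dual-die packages that Blackwell marketed as NVL72 would be named NVL144 under the Rubin convention.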

The most innovative element is Rubin CPX, a purpose-built accelerator for massive-context processing. The monolithic design delivers 30 petaflops of NVFP4 compute with 128GB of cost-efficient GDDR7 memory, and it is optimized specifically for the attention mechanisms in transformer models.¹⁰ NVIDIA says CPX provides 3x faster attention than GB300 NVL72 systems. The goal is to let AI models work efficiently through million-token contexts, roughly the scale of an hour of video or an entire codebase.¹¹
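
Attention becomes the bottleneck at these lengths because, in standard dense self-attention, the score computation grows with the square of the sequence length. The sketch below illustrates that scaling. It is a back-of-the-envelope estimate and assumes plain dense attention during prefill: no sparsity, and the causal mask, which roughly halves the work, is ignored. The model dimensions are hypothetical. The sketch says nothing about how Rubin CPX actually schedules this work.

```python
def attention_score_flops(seq_len: int, d_model: int, n_layers: int) -> int:
    """Approximate prefill FLOPs for QK^T plus the attention-weighted sum over V.

    Each of the two matrix products costs about 2 * n^2 * d FLOPs per layer
    (one multiply and one add per multiply-accumulate), so ~4 * n^2 * d total.
    """
    return 4 * seq_len**2 * d_model * n_layers


D_MODEL = 8192   # hypothetical hidden size
N_LAYERS = 80    # hypothetical layer count

short_ctx = attention_score_flops(128 * 1024, D_MODEL, N_LAYERS)   # ~128K tokens
long_ctx = attention_score_flops(1024 * 1024, D_MODEL, N_LAYERS)   # ~1M tokens

print(f"{short_ctx:.3e} FLOPs at 128K tokens")
print(f"{long_ctx:.3e} FLOPs at 1M tokens")
print(f"ratio: {long_ctx / short_ctx:.0f}x for 8x more tokens")
```

An 8x longer context costs 64x more attention arithmetic. That quadratic growth is why a dedicated attention accelerator becomes worthwhile at million-token scale.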

## Deployment demands a complete infrastructure overhaul

The standard Vera Rubin NVL144 system, scheduled to arrive in the second half of 2026, remains compatible with existing GB200/GB300 infrastructure because it uses the familiar Oberon rack architecture.¹² The system packs 144 GPU dies (72 packages) and 36 Vera CPUs, and it delivers 3.6 exaflops of FP4 inference performance, a 3.3x improvement over Blackwell Ultra.¹³ Power consumption is expected to stay at roughly 120-130kW per rack, similar to current deployments.
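
The generational multiples follow directly from NVIDIA's headline FP4 inference figures quoted in this article: 1.1 exaflops for the current 72-GPU Blackwell rack, 3.6 exaflops for Vera Rubin NVL144, and 15 exaflops for Rubin Ultra NVL576. Here is a quick check, using the Blackwell rack as the baseline:

```python
# FP4 inference throughput per rack, in exaflops, as quoted in this article.
GB300_NVL72_EF = 1.1
VR_NVL144_EF = 3.6
RU_NVL576_EF = 15.0


def speedup(new_ef: float, baseline_ef: float = GB300_NVL72_EF) -> float:
    """Rack-level peak throughput ratio versus the GB300 NVL72 baseline."""
    return new_ef / baseline_ef


print(f"Vera Rubin NVL144: {speedup(VR_NVL144_EF):.1f}x")
print(f"Rubin Ultra NVL576: {speedup(RU_NVL576_EF):.1f}x")
```

These are ratios of peak vendor figures. Real workload speedups will depend on the model, the numeric precision, and the software stack.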

The Vera Rubin NVL144 CPX variant goes further, integrating 144 Rubin CPX GPUs alongside 144 standard Rubin GPUs and 36 Vera CPUs. A single rack delivers 8 exaflops of NVFP4 compute (the 7.5x improvement over GB300 NVL72), 100TB of high-speed memory, and 1.7 PB/s of memory bandwidth.¹⁴

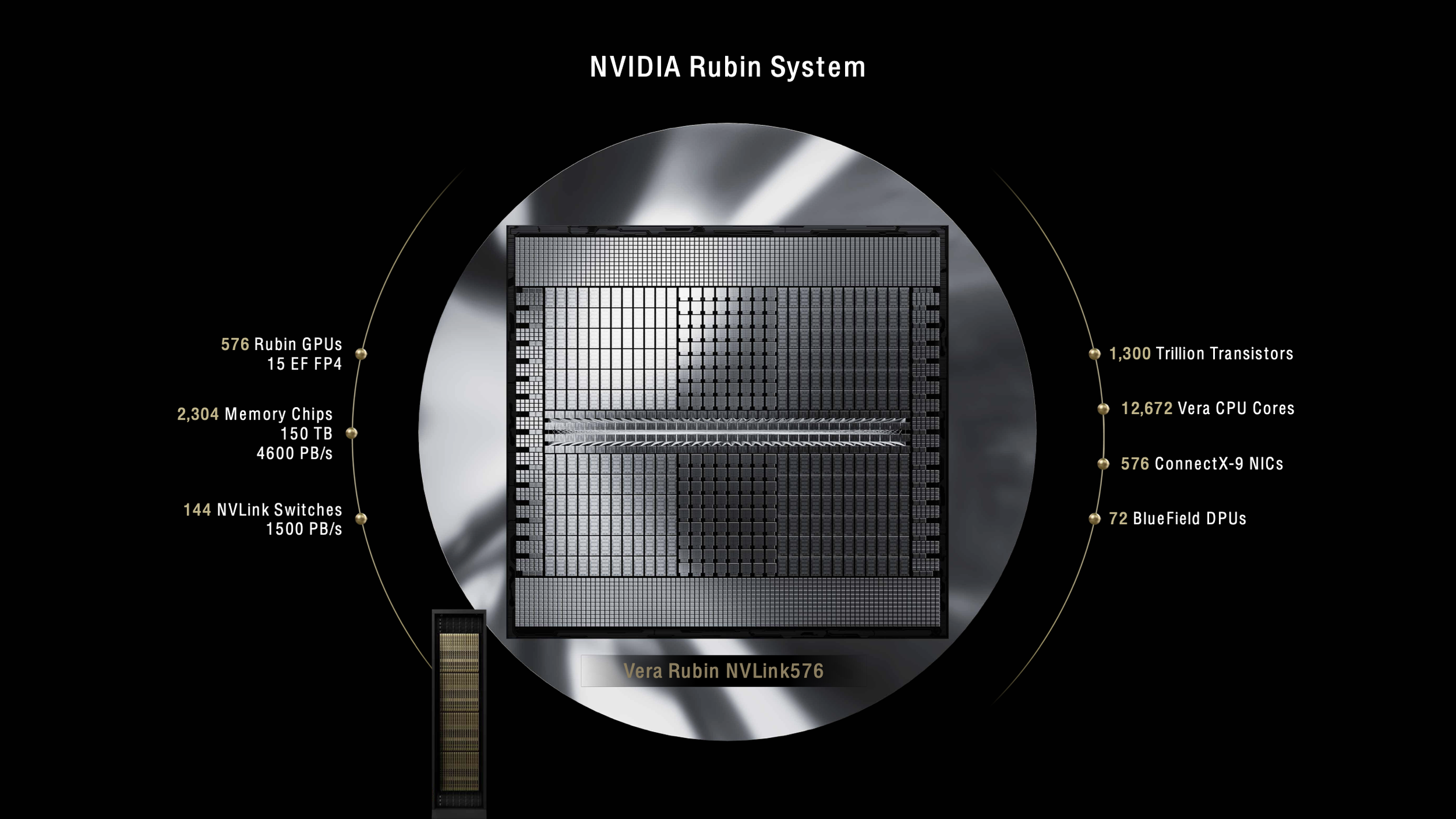

Everything changes with Rubin Ultra and the Kyber rack architecture in 2027. The NVL576 system crams 576 GPU dies into a single rack and draws 600kW, roughly five times the power of current racks.¹⁵ The Kyber design rotates compute blades 90 degrees into a vertical orientation and packs four pods of 18 blades each into the rack.¹⁶ Each blade houses eight Rubin Ultra GPUs alongside Vera CPUs, reaching densities that seemed impossible just a few years ago.
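
The Kyber figures are internally consistent, as a quick tally of the numbers above shows. Two caveats apply. The 120kW baseline is an assumption, taken from the approximate current-generation rack power cited earlier. The per-die power is a crude rack-level average that spreads CPU, networking, and conversion overhead across the GPU dies, so it is not a GPU TDP.

```python
PODS_PER_RACK = 4
BLADES_PER_POD = 18
GPU_DIES_PER_BLADE = 8
RACK_POWER_KW = 600
CURRENT_RACK_KW = 120  # assumption: approximate GB200/GB300-era rack power


def kyber_gpu_dies(pods: int = PODS_PER_RACK,
                   blades: int = BLADES_PER_POD,
                   dies: int = GPU_DIES_PER_BLADE) -> int:
    """Total GPU dies in a Kyber rack built from pods of vertical blades."""
    return pods * blades * dies


total_dies = kyber_gpu_dies()
print(total_dies)                             # 576, matching the NVL576 name
print(RACK_POWER_KW / CURRENT_RACK_KW)        # 5.0x a current rack
print(round(RACK_POWER_KW / total_dies, 2))   # ~1.04 kW per die, all-in average
```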

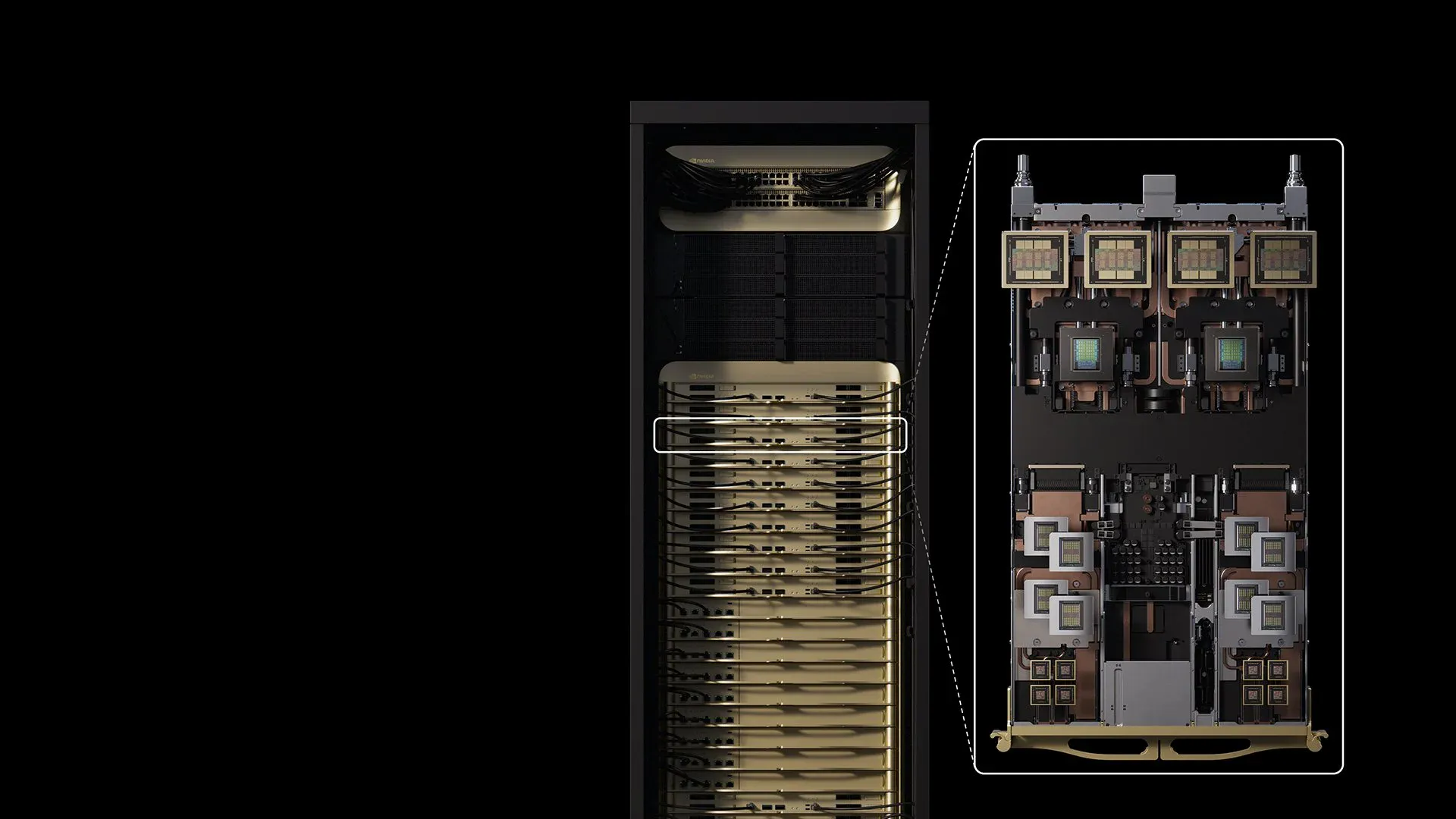

[caption id="" align="alignnone" width="2522"] Current NVIDIA Blackwell System with 72 GPUs delivering 1.1 exaflops [/caption]

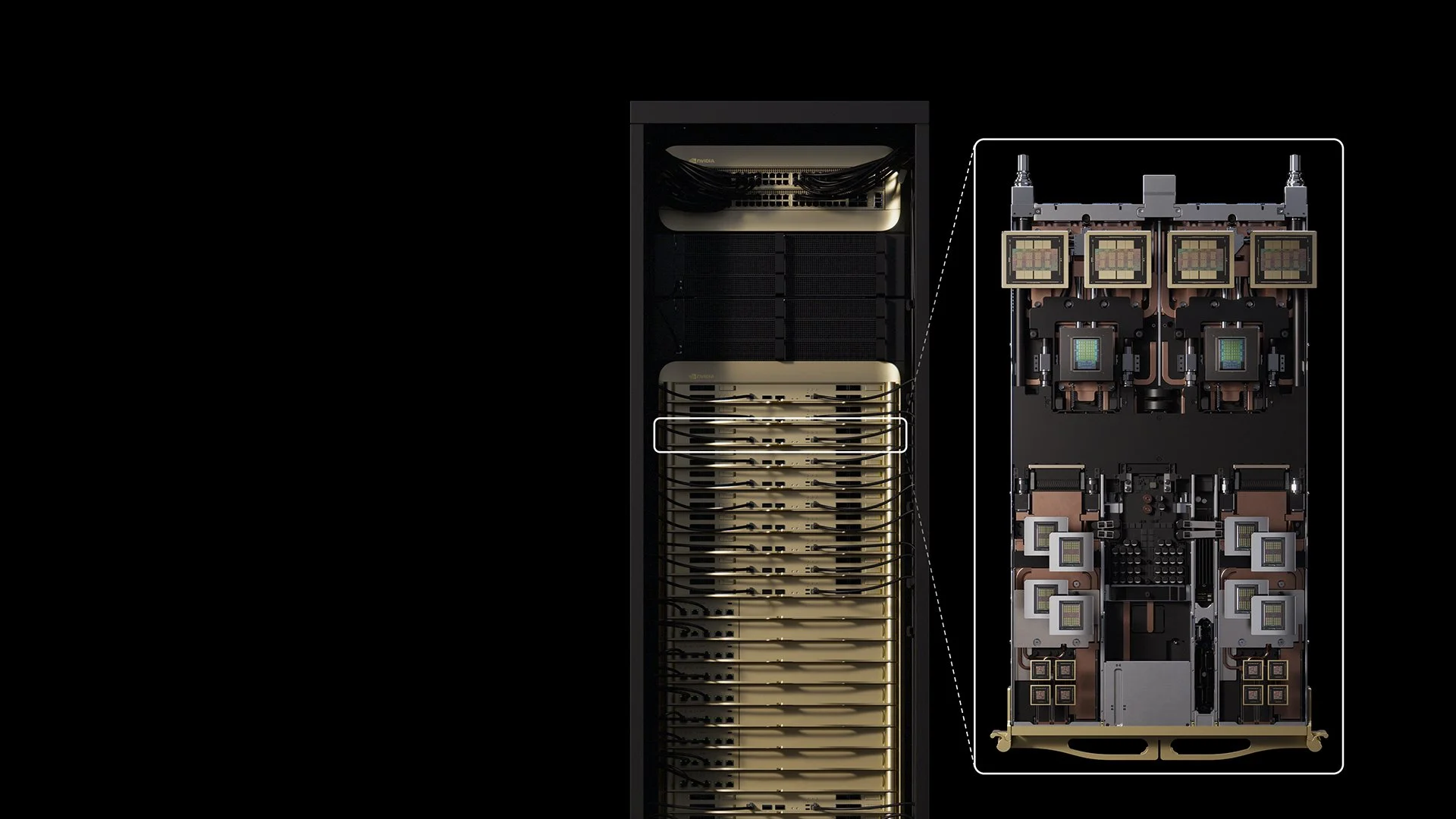

[caption id="" align="alignnone" width="2524"] Future NVIDIA Rubin System scaling to 576 GPUs and 15 exaflops in a single 600kW rack [/caption]

Cooling these systems requires a fully liquid-cooled design with no fans at all. Current systems, by contrast, still use air cooling for some auxiliary components.¹⁷ CoolIT Systems and Accelsius have already demonstrated cooling solutions that handle 250kW racks with 40°C inlet water, validating the technology path toward 600kW deployments.¹⁸ The Kyber rack includes a dedicated sidecar for power and cooling infrastructure, so each 600kW system effectively occupies two rack footprints.¹⁹
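
To give a sense of what 600kW of heat removal means hydraulically, the sketch below applies the basic sensible-heat relation: mass flow = heat load / (specific heat × temperature rise). It assumes single-phase plain water and a 10 K rise across the rack, both chosen for illustration. Real loops use glycol mixes or two-phase fluids with different properties, and this article cites no published Kyber flow rates.

```python
WATER_CP_J_PER_KG_K = 4186.0   # specific heat of liquid water, approx.
WATER_DENSITY_KG_PER_L = 1.0   # approximation; slightly lower near 40 degC


def coolant_flow_lpm(heat_kw: float, delta_t_k: float,
                     cp: float = WATER_CP_J_PER_KG_K,
                     density: float = WATER_DENSITY_KG_PER_L) -> float:
    """Liters per minute of single-phase coolant needed to absorb heat_kw
    with a temperature rise of delta_t_k across the rack."""
    mass_flow_kg_s = heat_kw * 1000.0 / (cp * delta_t_k)
    return mass_flow_kg_s / density * 60.0


for rack_kw in (120, 250, 600):
    print(f"{rack_kw:>4} kW at 10 K rise: {coolant_flow_lpm(rack_kw, 10):6.0f} L/min")
```

With a 10 K rise, the 600kW case works out to roughly 860 L/min, five times the flow of a 120kW rack. Allowing a larger temperature rise cuts the required flow proportionally, which is one reason warm-water inlet temperatures like 40°C matter.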

## Power architecture evolution enables megawatt-scale computing

NVIDIA's transition to 800 VDC power distribution addresses fundamental physics limitations of current infrastructure. Traditional 54V in-rack distribution would require 64U of power shelves for Kyber-scale systems, leaving no room for actual compute.²⁰ The 800V architecture eliminates rack-level AC/DC conversion, improves end-to-end efficiency by up to 5%, and reduces maintenance costs by up to 70%.²¹
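
The underlying physics is Ohm's-law arithmetic. At a fixed power, current scales as I = P / V, and resistive loss in a conductor scales with I². The sketch below compares the current needed to feed a 600kW rack at 54V and at 800V. It ignores conversion losses and assumes an identical conductor in both cases, so it shows only the scaling.

```python
def current_amps(power_w: float, volts: float) -> float:
    """DC current needed to deliver power_w at the given bus voltage."""
    return power_w / volts


def relative_i2r_loss(v_low: float, v_high: float) -> float:
    """How many times larger I^2 * R loss is at v_low than at v_high,
    for the same delivered power through the same conductor resistance."""
    return (v_high / v_low) ** 2


RACK_W = 600_000
print(f"54 V:  {current_amps(RACK_W, 54):,.0f} A")
print(f"800 V: {current_amps(RACK_W, 800):,.0f} A")
print(f"I^2R loss ratio, same conductor: {relative_i2r_loss(54, 800):.0f}x")
```

An 800V bus carries roughly 15x less current than a 54V bus. That lets the design use far less copper, or waste far less power in the same copper.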

The new power infrastructure supports racks from 100kW to over 1MW on the same backbone, providing the scalability needed for future generations.²² Companies deploying Rubin Ultra must plan for massive electrical upgrades: a single NVL576 rack draws roughly as much power as 400 to 500 average homes. Data centers planning for 2027 deployments should begin infrastructure upgrades now, including utility-scale power connections and potentially on-site generation.
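
The household comparison depends heavily on what a "typical home" is assumed to draw. The sketch below treats the rack as a constant 600kW load and divides by an assumed average household draw. The constant-load assumption and both household figures are illustrative, not from NVIDIA. An average of 1.5kW gives 400 homes. Roughly 1.2kW, about 10,500 kWh a year, gives closer to 500.

```python
HOURS_PER_YEAR = 8760


def homes_equivalent(rack_kw: float, home_avg_kw: float) -> float:
    """Number of homes whose average draw sums to one rack's continuous load."""
    return rack_kw / home_avg_kw


def annual_kwh(avg_kw: float) -> float:
    """Energy used in a year at a constant average draw."""
    return avg_kw * HOURS_PER_YEAR


RACK_KW = 600
for home_kw in (1.2, 1.5):  # hypothetical average household draws
    print(f"home at {home_kw} kW avg (~{annual_kwh(home_kw):,.0f} kWh/yr): "
          f"{homes_equivalent(RACK_KW, home_kw):.0f} homes")
print(f"rack energy per year: {annual_kwh(RACK_KW) / 1e6:.2f} GWh")
```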

For infrastructure specialists like Introl, the shift creates unprecedented opportunities. The company's expertise in deploying GPU infrastructure at hyperscale, including managing over 100,000 GPUs globally, and its extensive APAC presence position it well to support the complex deployments Vera Rubin demands.²³ Organizations need partners who understand not just GPU deployment but the intricate dance of power, cooling, and networking required for 600kW systems.

## Performance gains justify infrastructure investment

The NVL144 CPX rack's 8 exaflops of NVFP4 compute, 100TB of high-speed memory, and 1.7 PB/s of memory bandwidth underpin NVIDIA's economic pitch.²⁴ The company claims organizations can achieve a 30x to 50x return on investment. At the top of that range, that means $5 billion in revenue from a $100 million capital investment.²⁵
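
NVIDIA's ROI claim is simple multiplication, and the $5 billion figure corresponds to the top of the range. The sketch below treats "return" as gross revenue per dollar of capital, which is how the claim is framed. The helper name is hypothetical, and the calculation deliberately omits operating costs.

```python
def revenue_from_multiple(capex_usd: float, multiple: float) -> float:
    """Gross revenue implied by an 'Nx return' on a capital outlay.

    Headline arithmetic only: ignores power, cooling, staffing,
    depreciation, and every other operating cost.
    """
    return capex_usd * multiple


CAPEX = 100_000_000  # the $100 million example from NVIDIA's claim
low, high = [revenue_from_multiple(CAPEX, m) for m in (30, 50)]
print(f"${low / 1e9:.0f}B to ${high / 1e9:.0f}B")
```

The same $100 million yields $3 billion at the low end of NVIDIA's range, before any operating costs are subtracted.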

Early adopters include Germany's Leibniz Supercomputing Centre, which is deploying the Blue Lion supercomputer on Vera Rubin and expects 30 times the computing power of its current system.²⁶ Lawrence Berkeley National Lab's Doudna system will also run on Vera Rubin, combining simulation, data, and AI into a single platform for scientific computing.²⁷

The Rubin CPX's specialization for context processing targets a critical bottleneck in current AI systems. Companies such as Cursor, Runway, and Magic are already exploring how CPX can accelerate coding assistants and video generation applications that must process contexts of a million tokens or more.²⁸ Keeping an entire codebase or an hour of video in context at once could fundamentally change what AI applications can achieve.

## Infrastructure challenges create market opportunities

The leap to 600kW racks exposes harsh realities about current data center capabilities. Most facilities struggle to support 40kW racks, and even cutting-edge AI data centers rarely exceed 120kW. The transition requires not just new cooling systems but complete facility redesigns, from concrete floors capable of supporting massive weight loads to electrical substations sized for industrial operations.
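
One way to see the gap is to count how many racks at today's densities would share the power envelope of a single Kyber rack. The 40kW and 120kW tiers come from the paragraph above. The helper is hypothetical and deliberately ignores cooling, floor loading, and redundancy.

```python
import math

KYBER_RACK_KW = 600


def racks_to_match(target_kw: float, per_rack_kw: float) -> int:
    """Racks of a given density needed to reach the same total power."""
    return math.ceil(target_kw / per_rack_kw)


for label, kw in (("typical facility", 40), ("leading AI facility", 120)):
    print(f"{label} ({kw} kW/rack): {racks_to_match(KYBER_RACK_KW, kw)} racks")
```

Put differently, electrical and cooling capacity that once served fifteen 40kW positions must now converge on one rack and its sidecar.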

"The question remains how many existing datacenter facilities will be able to support such a dense configuration," notes The Register, highlighting that the custom-built nature of Kyber racks means facilities need purpose-built infrastructure.²⁹ Greenfield developments in regions with surplus renewable or nuclear energy, such as Scandinavia, Quebec, and the UAE, will likely lead adoption.³⁰

The timeline gives the industry breathing room but demands immediate action. Organizations planning AI infrastructure for 2027 and beyond must make decisions now about facility locations, power procurement, and cooling architecture. Rubin Ultra's second-half 2027 launch comes roughly two and a half years after its March 2025 announcement. That lead time reflects the complexity of deploying infrastructure that operates at the edge of what's physically possible.

## The road beyond Vera Rubin

NVIDIA's roadmap extends beyond Vera Rubin to the Feynman architecture in 2028, likely pushing toward 1-megawatt racks.³¹ Vertiv CEO Giordano Albertazzi suggests that achieving MW-scale density will require "a further revolution in the liquid cooling, and a paradigm change on the power side."³² The trajectory seems inevitable: AI workloads demand exponential increases in compute density, and the economics favor concentration over distribution.

The shift from incremental improvements to revolutionary changes in GPU infrastructure mirrors the broader AI transformation. Just as large language models jumped from billions to trillions of parameters, the infrastructure supporting them must make similar leaps. Vera Rubin represents not just faster GPUs but a fundamental rethinking of how compute infrastructure works.

For companies like Introl specializing in GPU infrastructure deployment, Vera Rubin creates a generational opportunity. Organizations need partners who can navigate the complexity of 600kW deployments, from initial planning through implementation and ongoing optimization. The companies that successfully deploy Vera Rubin will gain significant competitive advantages in AI capabilities, while those that hesitate risk losing out as the industry races toward exascale AI.

## Conclusion

NVIDIA's Vera Rubin platform forces the data center industry to confront uncomfortable truths about infrastructure limitations while offering unprecedented computational capabilities. The 600kW racks of 2027 represent more than just higher power consumption—they mark a complete transformation in how AI infrastructure gets built, cooled, and operated. Organizations that start planning now, partnering with experienced infrastructure specialists who understand the complexities of next-generation deployments, will be best positioned to harness the revolutionary capabilities Vera Rubin enables.

The platform's arrival in 2026-2027 gives the industry time to prepare, but the clock is ticking. Data centers designed today must anticipate tomorrow's requirements, and Vera Rubin makes clear that tomorrow demands radical departures from conventional thinking. The companies that embrace this transformation will power the next generation of AI breakthroughs, from million-token language models to real-time video generation systems that seem like science fiction today.

## References

¹ The Register. "Nvidia's Vera Rubin CPU, GPUs chart course for 600kW racks." March 19, 2025. https://www.theregister.com/2025/03/19/nvidia_charts_course_for_600kw.

² NVIDIA Newsroom. "NVIDIA Unveils Rubin CPX: A New Class of GPU Designed for Massive-Context Inference." 2025. https://nvidianews.nvidia.com/news/nvidia-unveils-rubin-cpx-a-new-class-of-gpu-designed-for-massive-context-inference.

³ Ibid.

⁴ Data Center Dynamics. "GTC: Nvidia's Jensen Huang, Ian Buck, and Charlie Boyle on the future of data center rack density." March 21, 2025. https://www.datacenterdynamics.com/en/analysis/nvidia-gtc-jensen-huang-data-center-rack-density/.

⁵ TechPowerUp. "NVIDIA Unveils Vera CPU and Rubin Ultra AI GPU, Announces Feynman Architecture." 2025. https://www.techpowerup.com/334334/nvidia-unveils-vera-cpu-and-rubin-ultra-ai-gpu-announces-feynman-architecture.

⁶ CNBC. "Nvidia announces Blackwell Ultra and Vera Rubin AI chips." March 18, 2025. https://www.cnbc.com/2025/03/18/nvidia-announces-blackwell-ultra-and-vera-rubin-ai-chips-.html.

⁷ Yahoo Finance. "Nvidia debuts next-generation Vera Rubin superchip at GTC 2025." March 18, 2025. https://finance.yahoo.com/news/nvidia-debuts-next-generation-vera-rubin-superchip-at-gtc-2025-184305222.html.

⁸ Next Platform. "Nvidia Draws GPU System Roadmap Out To 2028." March 19, 2025. https://www.nextplatform.com/2025/03/19/nvidia-draws-gpu-system-roadmap-out-to-2028/.

⁹ SemiAnalysis. "NVIDIA GTC 2025 – Built For Reasoning, Vera Rubin, Kyber, CPO, Dynamo Inference, Jensen Math, Feynman." March 19, 2025. https://semianalysis.com/2025/03/19/nvidia-gtc-2025-built-for-reasoning-vera-rubin-kyber-cpo-dynamo-inference-jensen-math-feynman/.

¹⁰ NVIDIA Newsroom. "NVIDIA Unveils Rubin CPX: A New Class of GPU Designed for Massive-Context Inference."

¹¹ Ibid.

¹² Tom's Hardware. "Nvidia announces Rubin GPUs in 2026, Rubin Ultra in 2027, Feynman also added to roadmap." March 18, 2025. https://www.tomshardware.com/pc-components/gpus/nvidia-announces-rubin-gpus-in-2026-rubin-ultra-in-2027-feynam-after.

¹³ The New Stack. "NVIDIA Unveils Next-Gen Rubin and Feynman Architectures, Pushing AI Power Limits." April 14, 2025. https://thenewstack.io/nvidia-unveils-next-gen-rubin-and-feynman-architectures-pushing-ai-power-limits/.

¹⁴ NVIDIA Newsroom. "NVIDIA Unveils Rubin CPX: A New Class of GPU Designed for Massive-Context Inference."

¹⁵ Data Center Dynamics. "Nvidia's Rubin Ultra NVL576 rack expected to be 600kW, coming second half of 2027." March 18, 2025. https://www.datacenterdynamics.com/en/news/nvidias-rubin-ultra-nvl576-rack-expected-to-be-600kw-coming-second-half-of-2027/.

¹⁶ Tom's Hardware. "Nvidia shows off Rubin Ultra with 600,000-Watt Kyber racks and infrastructure, coming in 2027." March 19, 2025. https://www.tomshardware.com/pc-components/gpus/nvidia-shows-off-rubin-ultra-with-600-000-watt-kyber-racks-and-infrastructure-coming-in-2027.

¹⁷ Data Center Dynamics. "GTC: Nvidia's Jensen Huang, Ian Buck, and Charlie Boyle on the future of data center rack density."

¹⁸ Data Center Frontier. "CoolIT and Accelsius Push Data Center Liquid Cooling Limits Amid Soaring Rack Densities." 2025. https://www.datacenterfrontier.com/cooling/article/55281394/coolit-and-accelsius-push-data-center-liquid-cooling-limits-amid-soaring-rack-densities.

¹⁹ Data Center Dynamics. "GTC: Nvidia's Jensen Huang, Ian Buck, and Charlie Boyle on the future of data center rack density."

²⁰ NVIDIA Technical Blog. "NVIDIA 800 VDC Architecture Will Power the Next Generation of AI Factories." May 20, 2025. https://developer.nvidia.com/blog/nvidia-800-v-hvdc-architecture-will-power-the-next-generation-of-ai-factories/.

²¹ Ibid.

²² Ibid.

²³ Introl. "Coverage Area." Accessed 2025. https://introl.com/coverage-area.

²⁴ NVIDIA Newsroom. "NVIDIA Unveils Rubin CPX: A New Class of GPU Designed for Massive-Context Inference."

²⁵ Ibid.

²⁶ NVIDIA Blog. "Blue Lion Supercomputer Will Run on NVIDIA Vera Rubin." June 10, 2025. https://blogs.nvidia.com/blog/blue-lion-vera-rubin/.

²⁷ Ibid.

²⁸ NVIDIA Newsroom. "NVIDIA Unveils Rubin CPX: A New Class of GPU Designed for Massive-Context Inference."

²⁹ The Register. "Nvidia's Vera Rubin CPU, GPUs chart course for 600kW racks."

³⁰ Global Data Center Hub. "Nvidia's 600kW Racks Are Here (Is Your Infrastructure Ready?)." March 23, 2025. https://www.globaldatacenterhub.com/p/issue-8-nvidias-600kw-racks-are-hereis.

³¹ TechPowerUp. "NVIDIA Unveils Vera CPU and Rubin Ultra AI GPU, Announces Feynman Architecture."

³² Data Center Dynamics. "GTC: Nvidia's Jensen Huang, Ian Buck, and Charlie Boyle on the future of data center rack density."