Introl Blog

NVIDIA's FP4 Inference Delivers 50x Efficiency

FP4 inference delivers 25-50x energy efficiency with 3.5x memory reduction. DeepSeek-R1 hits 250+ tokens/sec. The $0.02/token era arrives.

Malaysia's $15B AI revolution powers Southeast Asia's digital future

Malaysia secures $15B+ in AI investments, deploys region's first H100 GPU platform. Tech giants compete as Johor transforms into Southeast Asia's AI powerhouse.

Small Modular Nuclear Reactors (SMRs) Power AI: $10B Nuclear Revolution Transforms Data Centers

Tech giants commit $10B+ to small modular reactors powering AI data centers. First SMR facilities online by 2030 as nuclear meets AI's 945 TWh energy demand.

Singapore's $27B AI Revolution Powers Southeast Asia 2025

Singapore pairs $1.6B in government funding with $26B in tech investments to become Southeast Asia's AI hub, a market that generates 15% of NVIDIA's global revenue.

Japan's $135B AI Revolution: Quantum + GPU Infrastructure

Japan's ¥10 trillion AI push powers 2.1GW data centers, 10,000+ GPU deployments. Government-corporate $135B convergence creates Asia's quantum-AI powerhouse.

South Korea's $65 Billion AI Revolution: How Samsung and SK Hynix Lead Asia's GPU Infrastructure Boom

South Korea commits $65 billion to AI infrastructure through 2027, with Samsung and SK Hynix controlling 90% of the global HBM market. Data centers expand to 3 gigawatts and 15,000 GPU deployments, while partnerships with AWS, Microsoft, and NVIDIA transform Seoul into Asia's AI capital.

Liquid Cooling vs Air: The 50kW GPU Rack Guide (2025)

GPU racks hit 50kW thermal limits. Liquid cooling delivers 21% energy savings, 40% cost reduction. Essential guide for AI infrastructure teams facing the wall.

Indonesia's AI Revolution: How Southeast Asia's Largest Economy Became a Global AI Powerhouse

Indonesia leads global AI adoption at 92% with $10.88B market by 2030. Tech giants invest billions in infrastructure while local startups drive indigenous innovation across Southeast Asia's largest economy.

How Isambard-AI Deployed 5,448 GPUs in 4 Months: The New Blueprint for AI Infrastructure

Isambard-AI's record-breaking deployment of 5,448 NVIDIA GPUs reveals why modern AI infrastructure demands specialized expertise in liquid cooling, high-density power, and complex networking.

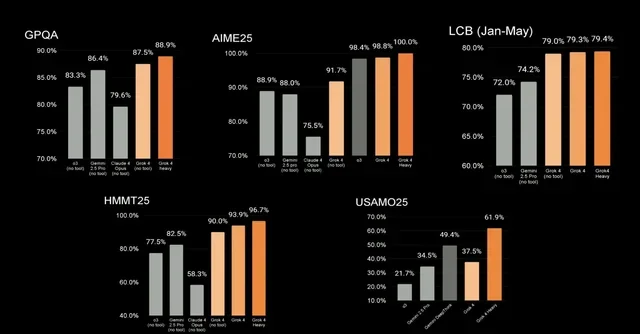

Grok 4 Just Shattered the AI Ceiling—Here's Why That Changes Everything

xAI's Grok 4 achieves unprecedented benchmark scores with its 200,000-GPU infrastructure, doubling competitors' performance on critical reasoning tests. The model's unique multi-agent approach and integration with Tesla's CFD software signal a shift from AI assistants to genuine reasoning partners. Three weeks after launch, developers report capabilities that transform how we approach complex problem-solving.

Why AI Data Centers Look Nothing Like They Did Two Years Ago

NVIDIA's power smoothing cuts grid demand 30%. Liquid cooling handles 1,600W GPUs. Smart companies see 350% ROI while others face 80% failure rates.

Building Data Centers with Sustainability in Mind: What Works

Data centers power our digital world but consume massive resources. Learn proven strategies for sustainable design—from liquid cooling and water conservation to smart site selection and green construction—that cut costs while meeting ESG goals.

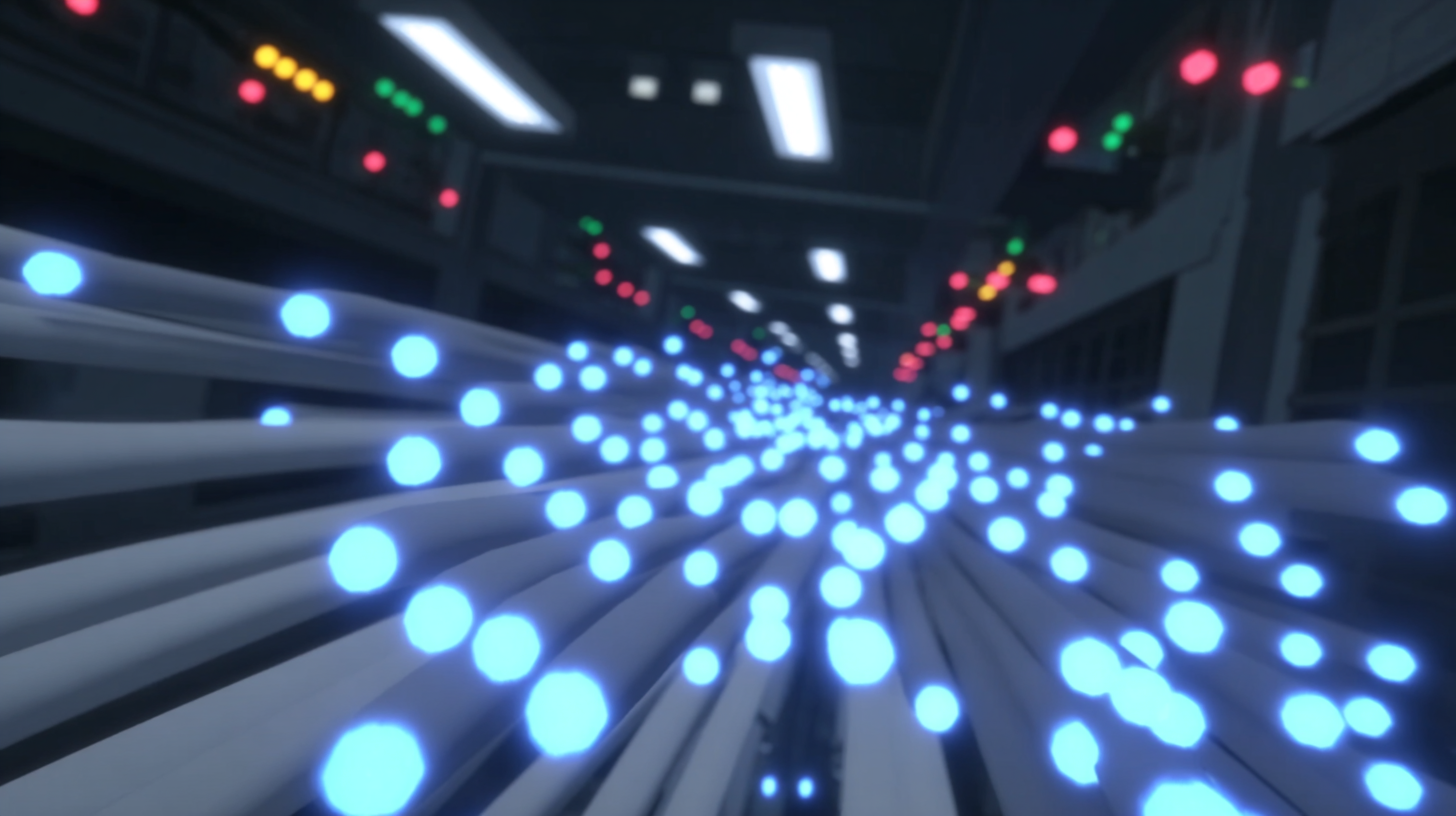

Structured Cabling vs. Liquid-Cooled Conduits: Designing for 100 kW-Plus Racks

As AI workloads push rack densities past 100 kW, data centers must master both structured cabling for data flow and liquid cooling for heat removal. Learn how to design infrastructure that keeps GPUs running at peak performance.

Why the NVIDIA GB300 NVL72 (Blackwell Ultra) Matters 🤔

NVIDIA's GB300 NVL72 delivers 1.5x more AI performance than GB200 with 72 Blackwell Ultra GPUs, 288 GB memory per GPU, and 130 TB/s NVLink bandwidth. Here's what deployment engineers need to know about power, cooling, and cabling for these 120 kW AI data center cabinets.

Scalable On-Site Staffing: Launching Critical Infrastructure at Hyperspeed

The AI boom is driving unprecedented demand for data center infrastructure, but more than half of operators face critical staffing shortages that threaten capacity growth. Introl's Workforce-as-a-Service model delivers certified technicians in days—not months—enabling hyperscale sites to launch at speed while avoiding the $5+ million daily opportunity costs of delays.

Mapping the Future: How Global Coverage Transforms AI Infrastructure Deployment

When your 50,000-GPU cluster crashes at 3 AM Singapore time, the difference between a 4-hour and 24-hour response equals months of lost research. Discover how Introl's 257 global locations transform geographic coverage into competitive advantage for AI infrastructure deployment.

H100 vs. H200 vs. B200: Choosing the Right NVIDIA GPUs for Your AI Workload

NVIDIA's H100, H200, and B200 GPUs each serve different AI infrastructure needs—from the proven H100 workhorse to the memory-rich H200 and the groundbreaking B200. We break down real-world performance, costs, and power requirements to help you choose the right GPU for your specific workload and budget.

Mitigating the Cost of Downtime in the Age of Artificial Intelligence

Learn how predictive failure analysis and remote hands contracts can save enterprises up to $500,000 per hour in downtime costs. Discover ROI strategies for protecting AI and HPC investments with a detailed case study showing 20% returns.

NVIDIA's Computex 2025 Revolution: Transforming Data Centers into AI Factories

NVIDIA CEO Jensen Huang's Computex 2025 keynote wasn't just another product launch—it was the blueprint for computing's third major revolution. As data centers transform into token-producing "AI factories," NVIDIA's latest innovations in networking, enterprise AI, and robotics are setting the stage for a new economic paradigm where computational intelligence becomes as fundamental as electricity. Dive into our comprehensive analysis of how these developments will reshape enterprise computing strategy and what it means for your infrastructure roadmap.

The Art of Digital Demolition: Decommissioning High-Performance Computing Centers with Precision and Purpose

Decommissioning an HPC data center isn't just unplugging servers – it's a high-stakes operation requiring surgical precision and military-grade planning. From sanitizing mission-critical data to extracting components worth more than luxury cars, this guide walks you through the entire process of dismantling digital fortresses without security breaches, compliance violations, or leaving money on the table. Whether you're migrating to the cloud or upgrading infrastructure, learn how to transform a potential organizational migraine into a showcase of technical excellence and environmental responsibility.