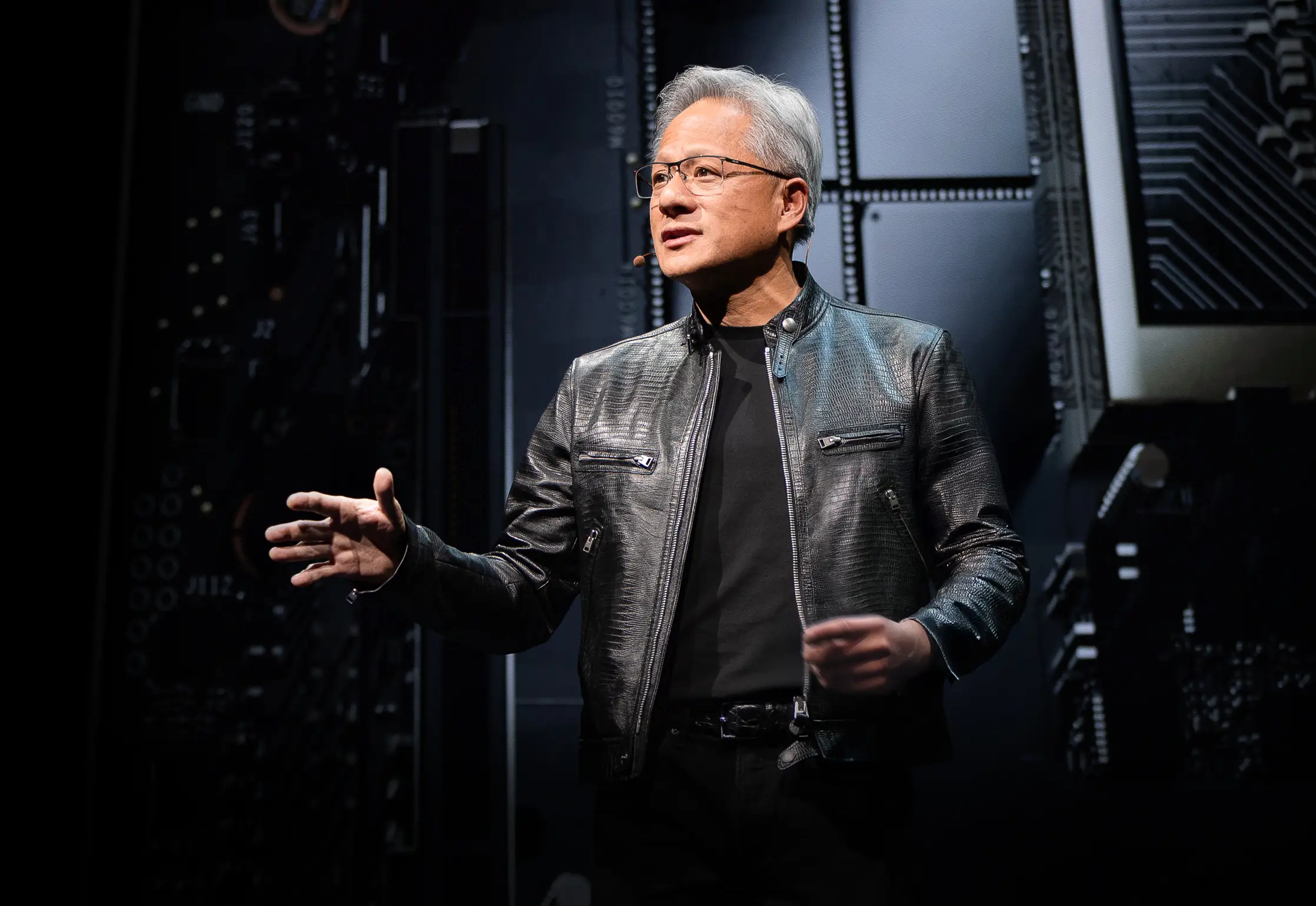

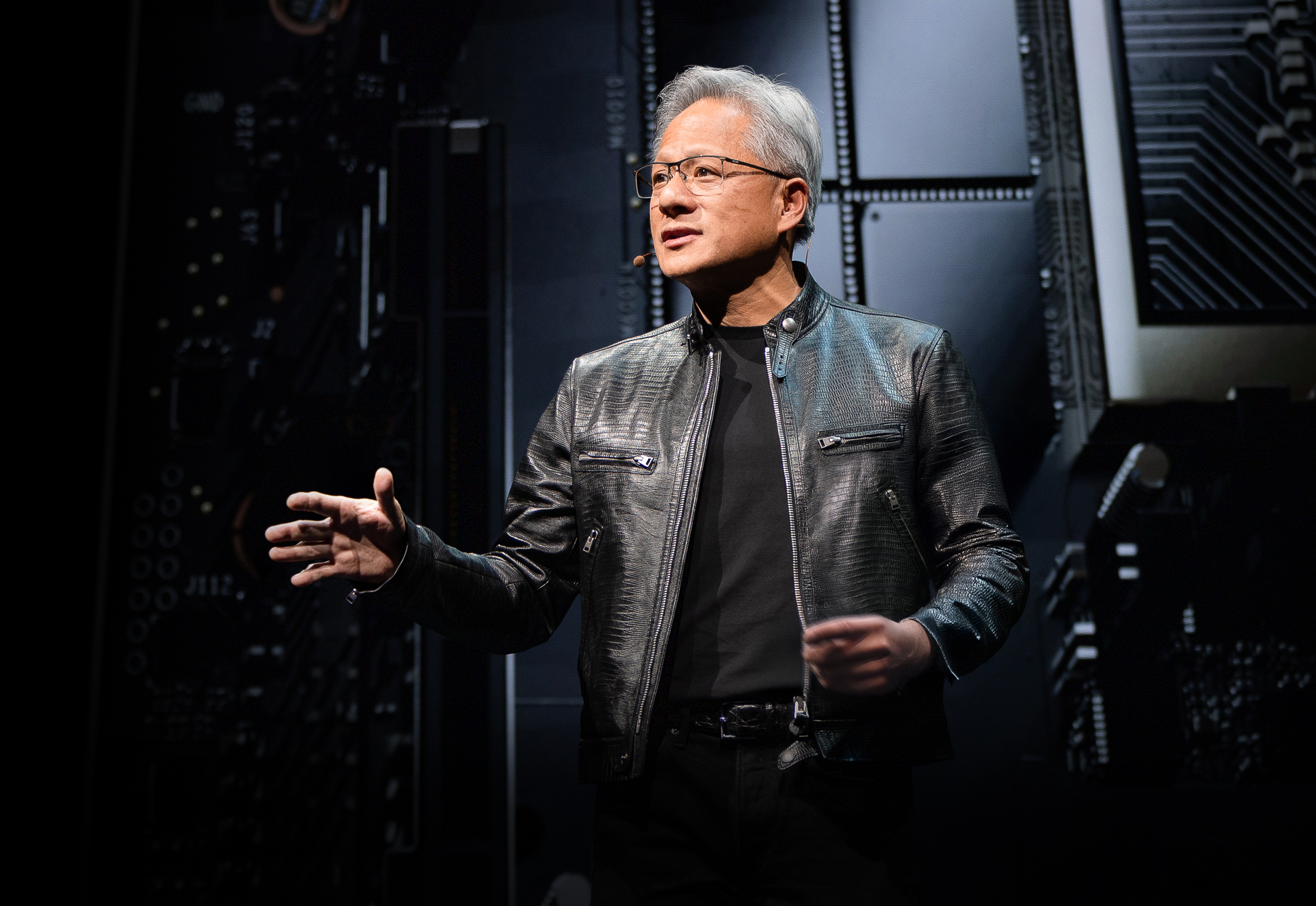

NVIDIA CEO Jensen Huang took the stage at Computex 2025 wearing his trademark leather jacket and unveiled new hardware and an entirely reimagined computing paradigm. The semiconductor giant has decisively transformed into an AI infrastructure company, building the foundations for what Huang calls "the infrastructure of intelligence"—the third major infrastructure revolution following electricity and the internet.

This keynote wasn't just another product announcement—it was Jensen laying out NVIDIA's blueprint for reshaping the computing landscape. The technical leaps, strategic pivots, and market plays he revealed will likely make this the Computex we'll reference for years. Watch the full Computex 2025 Nvidia keynote.

NVIDIA's Strategic Evolution: From Graphics Cards to Infrastructure Provider

NVIDIA's transformation story is mind-blowing. In 1993, Jensen saw a "$300 million chip opportunity"—a substantial market. Fast-forward to today, and he's commanding a trillion-dollar AI infrastructure juggernaut. That kind of explosive growth doesn't just happen—NVIDIA fundamentally reinvented itself multiple times along the way.

During his keynote, Jensen highlighted the turning points that made today's NVIDIA possible:

-

2006: CUDA landed and flipped parallel computing on its head. Suddenly, developers who'd never considered using GPUs for general computing were building applications that would have been impossible on traditional CPUs.

-

2016: The DGX1 emerged as NVIDIA's first no-compromises, AI-focused system. In what now looks like an almost eerie bit of foreshadowing, Jensen donated the first unit to OpenAI, effectively giving them the computational foundation to eventually lead to our current AI revolution.

-

2019: The acquisition of Mellanox, enabling NVIDIA to reconceptualize data centers as unified computing units

This transformation culminates in NVIDIA's current position as "an essential infrastructure company"—a status that Huang emphasized by highlighting its unprecedented five-year public roadmaps, which enable global infrastructure planning for AI deployment.

Redefining Performance Metrics: The Token Economy

NVIDIA has introduced a fundamental shift in how we measure computational output. Rather than traditional metrics like FLOPs or operations per second, Huang positioned AI data centers as factories producing "tokens"—units of computational intelligence:

"Companies are starting to talk about how many tokens they produced last quarter and how many tokens they produced last month. Very soon, we will discuss how many tokens we produce every hour, just as every factory does."

This reframing directly connects computational investment and business output, aligning AI infrastructure with traditional industrial frameworks. The model positions NVIDIA at the epicenter of a new economic paradigm where computational efficiency directly translates to business capability.

Blackwell Architecture Upgrades: GB300 Specifications and Performance Metrics

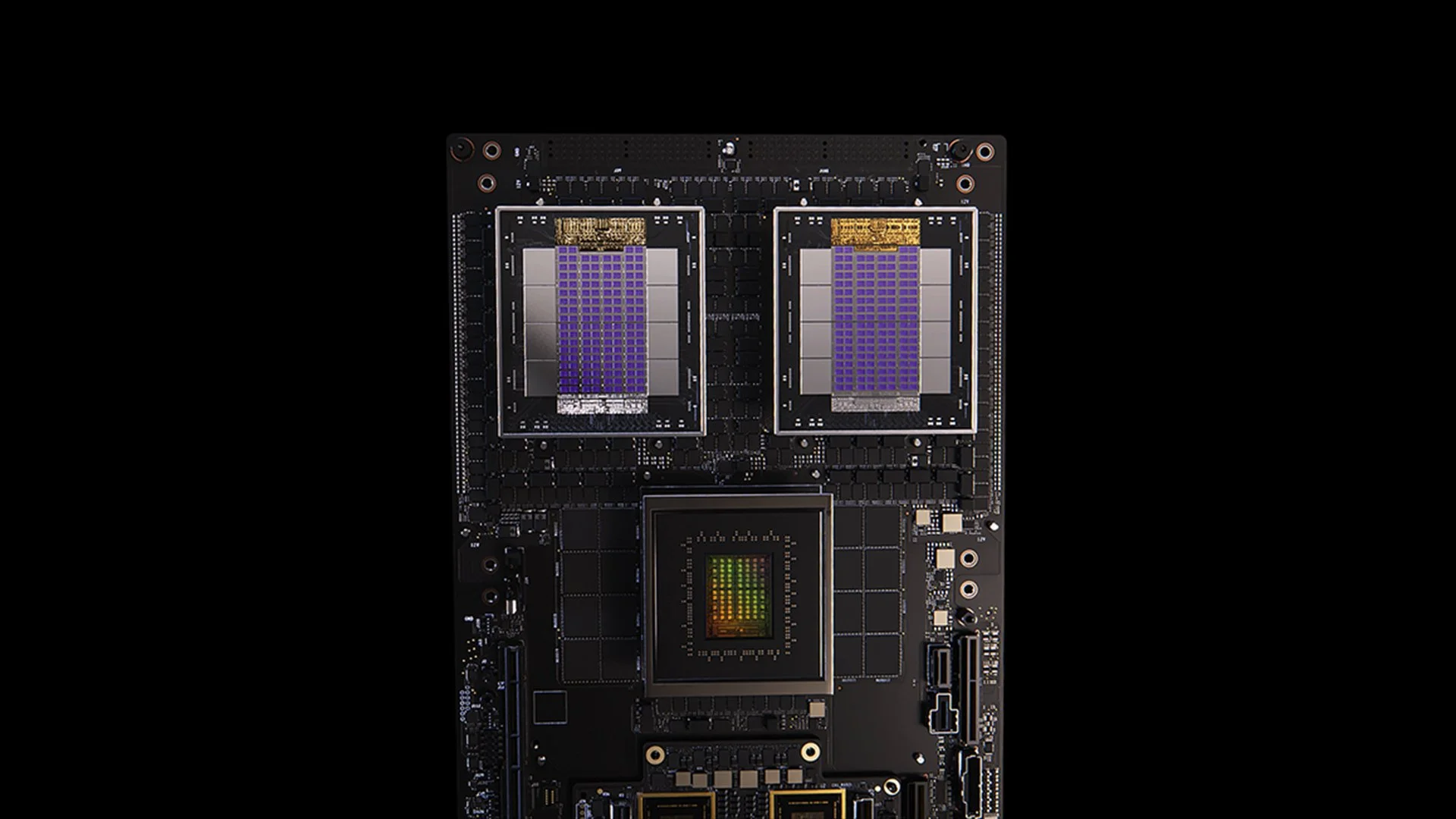

[caption id="" align="alignnone" width="1280"] GB200 system [/caption]

GB200 system [/caption]

The GB300 update to the Blackwell architecture exemplifies NVIDIA's relentless performance improvement cycle. Scheduled for Q3 2025, the GB300 delivers:

-

1.5x inference performance boost over GB200

-

1.5x HBM memory capacity increase

-

2x networking bandwidth improvement

-

Fully liquid-cooled design

-

Backward compatible with existing chassis and systems

Each GB300 node delivers approximately 40 petaflops—effectively replacing the entire Sierra supercomputer (circa 2018), which required 18,000 Volta GPUs. This 4,000x performance gain within six years vastly outpaces traditional Moore's Law scaling, demonstrating NVIDIA's multi-faceted approach to performance acceleration through architecture, software, and interconnect innovations.

MVLink: Redefining Interconnect Technology

MVLink represents the most significant advancement in GPU interconnect technology since NVLink's introduction. The system enables complete disaggregation of compute resources across an entire rack, turning 72 GPUs (144 GPU dies) into a single massive computational unit.

The technical specifications are staggering:

-

Individual MVLink switches: 7.2 TB/s bandwidth

-

MVLink spine: 130 TB/s all-to-all bandwidth

-

Physical implementation: 5,000 precisely length-matched coaxial cables (approximately 2 miles total)

-

Power density: 120 kilowatts per rack (necessitating liquid cooling)

For context, Huang noted that the peak traffic of the entire internet is approximately 900 terabits per second (112.5 TB/s), making a single MVLink spine capable of handling more traffic than the global internet at peak capacity.

MVLink Fusion: Creating an Open AI Infrastructure Ecosystem

MVLink Fusion might be the most innovative ecosystem play NVIDIA has made in years. Instead of forcing partners to go all-in on NVIDIA hardware, they're opening the architecture to let companies build semi-custom AI systems that still tie into the NVIDIA universe.

The approach is surprisingly flexible:

-

Custom ASIC Integration: Got your specialized accelerator? No problem. Partners can drop MVLink chiplets to connect their custom silicon to NVIDIA's ecosystem. It's like NVIDIA saying, "Build whatever specialized hardware you want—just make sure it can talk to our stuff."

-

Custom CPU Integration: CPU vendors aren't left out either. They can directly implement MVLink's chip-to-chip interfaces, creating a direct highway between their processors and Blackwell GPUs (or the upcoming Ruben architecture). MVLink is huge for companies invested in specific CPU architectures.

Partner announcements span the semiconductor industry:

-

Silicon implementation partners: LCHIP, Astera Labs, Marll, MediaTek

-

CPU vendors: Fujitsu, Qualcomm

-

EDA providers: Cadence, Synopsis

This approach strategically positions NVIDIA to capture value regardless of the specific hardware mix customers deploy, reflecting Huang's candid statement: "Nothing gives me more joy than when you buy everything from NVIDIA. I want you guys to know that. But it gives me tremendous joy if you buy something from NVIDIA."

Enterprise AI Deployment: RTX Pro Enterprise and Omniverse Server

The RTX Pro Enterprise and Omniverse server represent NVIDIA's most significant enterprise-focused compute offering, designed specifically to integrate AI capabilities into traditional IT environments:

-

Fully x86-compatible architecture

-

Support for traditional hypervisors (VMware, Red Hat, Nanix)

-

Kubernetes integration for familiar workload orchestration

-

Blackwell RTX Pro 6000s GPUs (8 per server)

-

CX8 networking chip providing 800 Gb/s bandwidth

-

1.7x performance uplift versus Hopper H100

-

4x performance on optimized models like Deepseek R1

The system establishes a new performance envelope for AI inference, measured in a dual-axis framework of throughput (tokens per second) and responsiveness (tokens per second per user)—critical metrics for what Huang describes as the era of "inference time scaling" or "thinking AI."

AI Data Platform: Reimagining Storage for Unstructured Data

NVIDIA's AI Data Platform introduces a fundamentally different approach to enterprise storage:

"Humans query structured databases like SQL... But AI wants to query unstructured data. They want semantic. They want meaning. And so we have to create a new type of storage platform."

Key components include:

-

NVIDIA AIQ (or IQ): A semantic query layer

-

GPU-accelerated storage nodes replacing traditional CPU-centric architectures

-

Post-trained AI models with transparent training data provenance

-

15x faster querying with 50% improved results compared to existing solutions

Storage industry partners implementing this architecture include Dell, Hitachi, IBM, NetApp, and Vast, creating a comprehensive enterprise AI data management ecosystem.

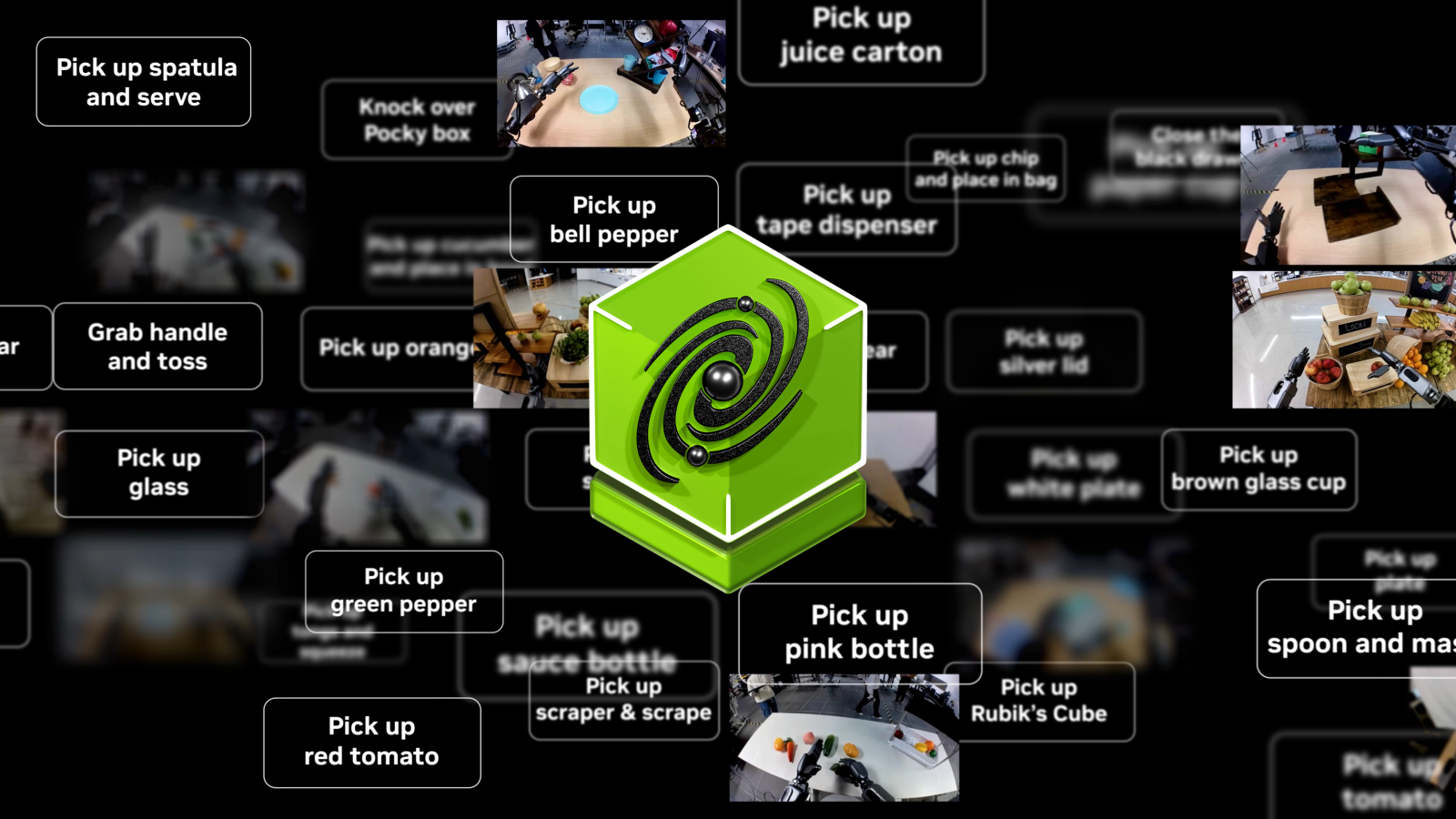

AI Operations and Robotics: Software Frameworks for the Intelligent Enterprise

The keynote introduced two crucial software frameworks:

-

AI Operations (AIOps): A comprehensive stack for managing AI agents in enterprise contexts, including data curation, model fine-tuning, evaluation, guardrails, and security. Partners include Crowdstrike, Data IQ, Data Robots, Elastic, Newonix, Red Hat, and Trend Micro.

-

Isaac Groot Platform N1.5: An open-sourced robotics development ecosystem including:

Newton physics engine (developed with Google DeepMind and Disney Research)

-

Jetson Thor robotics processor

-

NVIDIA Isaac operating system

-

Groot Dreams blueprint for synthetic trajectory data generation

The robotics initiative addresses a critical challenge: "For robotics to happen, you need AI. But to teach the AI, you need AI." This recursive optimization pattern leverages generative AI to expand limited human demonstration data into comprehensive robotics training sets.

Taiwan's Strategic Position in the AI Manufacturing Revolution

A significant portion of the keynote highlighted Taiwan's pivotal role in both producing and implementing AI technologies:

-

Taiwanese manufacturing companies (TSMC, Foxconn, Wistron, Pegatron, Delta Electronics, Quanta, Wiiwin, Gigabyte) are deploying NVIDIA Omniverse for digital twin implementations.

-

TSMC is using AI-powered tools on CUDA to optimize fab layouts and piping systems

-

Manufacturing partners use digital twins for virtual planning and predictive maintenance and as "robot gyms" for training robotic systems.

-

Foxconn, TSMC, the Taiwanese government, and NVIDIA are building Taiwan's first large-scale AI supercomputer.

Huang further cemented NVIDIA's commitment to the region by announcing plans for "NVIDIA Constellation," a new headquarters facility in Taipei.

Technical Analysis: What This Means for Enterprise AI Strategy

These announcements collectively represent a comprehensive reimagining of enterprise computing with several strategic implications:

-

Computational Scale Requirements: The inference-time performance demands of "reasoning AI" and agentic systems will drive significantly higher compute requirements than initial large language model deployments, necessitating architectural planning for massive scale-up and scale-out capabilities.

-

Disaggregation of Enterprise AI: The MVLink Fusion ecosystem enables unprecedented flexibility in building heterogeneous AI systems, potentially accelerating the adoption of specialized AI accelerators while maintaining NVIDIA's position in the ecosystem through interconnect technology.

-

Shift from Data Centers to AI Factories: We need to completely rethink how we measure the value of our infrastructure investments. Gone are the days when raw compute or storage capacity told the whole story. Now it's all about token production—how many units of AI output can your systems generate per second, per watt, and dollar? Jensen wasn't kidding when he said companies would soon report their token production, such as manufacturing metrics. The shift to AI factories will fundamentally rewrite the economics of how we deploy and justify AI infrastructure spending.

-

Digital Twin Integration: The fact that every major Taiwanese manufacturer is building Omniverse digital twins tells us everything we need to know—this isn't just a cool tech demo anymore. Digital twins have become essential infrastructure for companies serious about optimization. What's particularly fascinating is how this creates a feedback loop: companies build digital twins to optimize physical processes, then use those same environments to train AI and robotics, further improving the physical world. It's a continuous improvement cycle that keeps accelerating.

-

Robotic Workforce Planning: The convergence of agentic AI and physical robotics suggests organizations should develop integrated digital and physical automation strategies, with significant implications for workforce planning and facility design.

-

Software-Defined Infrastructure: Despite the hardware announcements, NVIDIA's continued emphasis on libraries and software frameworks reinforces that competitive advantage in AI will come from software optimization as much as raw hardware capabilities.

Navigating the AI Factory Transition

Transforming traditional data centers into AI factories requires specialized expertise that bridges hardware deployment, software optimization, and architectural design. At Introl, we've been implementing these advanced GPU infrastructure solutions for enterprises leaping into AI-first computing. Our team's deep experience with NVIDIA's ecosystem—from complex MVLink deployments to Omniverse digital twin implementations—helps organizations navigate this paradigm shift without the steep learning curve typically associated with cutting-edge infrastructure. Whether scaling up reasoning AI capabilities or building out your first AI factory floor, partnering with specialists can dramatically accelerate your time-to-value in this rapidly evolving landscape. Ready to get **it done? Set up a call today.

Conclusion: Computing's Third Era Has Arrived

Computex wasn't just NVIDIA showing off faster chips. What Jensen laid out blew past the usual "20% better than last year" announcements we've grown numb to. He's fundamentally reframing what computers are for. We've spent decades building machines that crunch numbers and move data around. Now, NVIDIA is building systems that manufacture intelligence as their primary output. It's like comparing a filing cabinet to a brain. Sure, both store information, but one sits there while the other creates new ideas. The shift might sound like semantics until you realize it changes everything about how we build, deploy, and measure computing systems.

"For the first time in all of our time together, not only are we creating the next generation of IT, but we've also done that several times, from PC to internet to cloud to mobile cloud. We've done that several times. But this time, not only are we creating the next generation of IT, we are creating a whole new industry."

This transition represents the third major computing paradigm shift, following the personal computing revolution and the internet/cloud era. Organizations integrating these AI infrastructure capabilities will likely establish insurmountable competitive advantages across industries.

The factories of computational intelligence are under construction today. The question is no longer whether AI will transform business—it's whether your organization is building the infrastructure to remain competitive in a world where computational intelligence becomes as fundamental to business operations as electricity.

## References and Additional Resources

-

NVIDIA Official Blackwell Architecture Overview: https://www.nvidia.com/en-us/data-center/technologies/blackwell-architecture/

-

NVIDIA MVLink Technical Documentation: https://developer.nvidia.com/mvlink

-

NVIDIA Omniverse Platform: https://www.nvidia.com/en-us/omniverse/

-

Isaac Robotics Platform: https://developer.nvidia.com/isaac-ros

-

NVIDIA AI Enterprise: https://www.nvidia.com/en-us/data-center/products/ai-enterprise/

-

NVIDIA Computex 2025 Official Press Materials: https://nvidianews.nvidia.com/news/computex-2025

-

NVIDIA CUDA-X Libraries Overview: https://developer.nvidia.com/gpu-accelerated-libraries

-

NVIDIA DGX Systems: https://www.nvidia.com/en-us/data-center/dgx-systems/