Trump Lets Nvidia Sell H200 Chips to China for 25% Revenue Cut

The Trump administration reversed export controls on Nvidia's H200 chips to China, demanding 25% of revenue. Here's what the policy shift means for AI infrastructure.

Deep dives into NVIDIA, AMD, and Intel accelerators—H100, H200, Blackwell, MI300X, Gaudi, and the silicon powering AI.

The AI revolution runs on silicon. From training frontier models to deploying inference at scale, GPU selection is the single most impactful infrastructure decision enterprises make today.

This hub covers everything data center architects need to know about AI accelerators: NVIDIA's H100 and H200 GPUs, the next-generation Blackwell B200, AMD's MI300X, which challenges NVIDIA in HPC workloads, and Intel's Gaudi chips, which offer an alternative to the NVIDIA ecosystem.

Whether you're specifying your next GPU cluster or evaluating multi-vendor strategies, our technical analysis helps you cut through marketing noise and make informed infrastructure decisions.

Trump reverses Biden export restrictions, allowing NVIDIA H200 sales to China with 25% surcharge. Blackwell GPUs remain restricted. Tariff-based controls replace bans.

DOJ shuts down $160M NVIDIA chip smuggling network to China. First AI diversion conviction. H100/H200 GPUs relabeled as 'SANDKYAN.' Operation Gatekeeper ongoing.

OpenAI and NVIDIA announce a $100 billion partnership to deploy 10 gigawatts of AI infrastructure, with the Vera Rubin platform delivering eight exaflops starting in 2026.

Dual RTX 5090s match H100 performance for 70B models at 25% of the cost. Complete hardware pricing guide for local LLM deployment, from consumer to enterprise GPUs.
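Whether a 70B model fits on two consumer cards depends mostly on weight precision. The sketch below is a rough, weights-only estimate under stated assumptions that do not come from the article: decimal gigabytes, 32 GB per RTX 5090, 80 GB per H100, and no allowance for KV cache, activations, or runtime overhead. The helper names are hypothetical.

```python
# Rough weight-memory sizing for a 70B-parameter model (weights only).
# Assumptions: decimal GB, 32 GB per RTX 5090, 80 GB per H100.
# KV cache, activations and framework overhead are ignored.

PARAMS = 70e9

# Storage cost per parameter for a few common precisions.
BYTES_PER_PARAM = {
    "fp16": 2.0,
    "fp8": 1.0,
    "int4": 0.5,
}

DUAL_5090_GB = 2 * 32  # two cards, assuming the model is split across both
H100_GB = 80


def weight_gb(params: float, precision: str) -> float:
    """Return the weight footprint in decimal gigabytes."""
    return params * BYTES_PER_PARAM[precision] / 1e9


def fits(params: float, precision: str, vram_gb: float) -> bool:
    """True if the weights alone fit in the given VRAM budget."""
    return weight_gb(params, precision) <= vram_gb


for p in BYTES_PER_PARAM:
    print(
        f"{p}: {weight_gb(PARAMS, p):.0f} GB | "
        f"dual 5090 fits: {fits(PARAMS, p, DUAL_5090_GB)} | "
        f"H100 fits: {fits(PARAMS, p, H100_GB)}"
    )
```

Under these assumptions, FP16 weights (140 GB) fit on neither setup, FP8 (70 GB) fits a single H100 but not 64 GB of pooled RTX 5090 memory, and 4-bit weights (35 GB) leave headroom on both, which is why quantization is usually part of any dual-5090 comparison.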

FP4 inference delivers 25-50x better energy efficiency and a 3.5x memory reduction. DeepSeek-R1 hits 250+ tokens/sec. The $0.02/token era arrives.
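A 4-bit format is nominally 4x smaller than FP16, but block-scaled FP4 formats also store shared scale factors, which is one plausible way to arrive at a figure near 3.5x. The sketch below assumes an NVFP4-style layout (one 8-bit scale per 16-element block) and, for comparison, an MXFP4-style layout (one 8-bit scale per 32-element block); per-tensor scales are treated as negligible. The article does not say which baseline or layout its 3.5x refers to.

```python
# Effective storage bits per element for block-scaled 4-bit formats,
# and the resulting size reduction versus 16-bit weights.
# Assumed layouts (illustrative, not taken from the article):
#   NVFP4-style: 4-bit values + one 8-bit scale per 16 elements
#   MXFP4-style: 4-bit values + one 8-bit scale per 32 elements


def effective_bits(value_bits: int, scale_bits: int, block_size: int) -> float:
    """Average bits per element, amortizing the shared block scale."""
    return value_bits + scale_bits / block_size


def reduction_vs_fp16(bits_per_element: float) -> float:
    """How many times smaller than 16-bit storage the format is."""
    return 16 / bits_per_element


nvfp4 = effective_bits(4, 8, 16)  # 4.5 bits per element
mxfp4 = effective_bits(4, 8, 32)  # 4.25 bits per element

print(f"NVFP4-style: {nvfp4} bits -> {reduction_vs_fp16(nvfp4):.2f}x smaller than FP16")
print(f"MXFP4-style: {mxfp4} bits -> {reduction_vs_fp16(mxfp4):.2f}x smaller than FP16")
```

Smaller blocks track local value ranges more closely at the cost of more scale overhead, so the 16-element layout lands at roughly 3.56x rather than the nominal 4x.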

NVIDIA's GB300 NVL72 delivers 1.5x more AI performance than GB200 with 72 Blackwell Ultra GPUs, 288 GB memory per GPU, and 130 TB/s NVLink bandwidth. Here's what deployment engineers need to know abou...
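The GB300 NVL72 figures quoted above can be sanity-checked with rack-level arithmetic. This minimal sketch assumes the 130 TB/s NVLink figure is the rack aggregate split evenly across all 72 GPUs, and uses decimal units throughout.

```python
# Rack-level arithmetic for the GB300 NVL72 figures quoted above.
# Assumption: 130 TB/s is the aggregate NVLink bandwidth, split evenly
# across the 72 GPUs; units are decimal (1 TB = 1000 GB).

GPUS_PER_RACK = 72
HBM_PER_GPU_GB = 288
NVLINK_AGGREGATE_TBPS = 130

# Total GPU memory available in one rack.
total_hbm_gb = GPUS_PER_RACK * HBM_PER_GPU_GB

# Implied per-GPU share of the aggregate NVLink bandwidth.
nvlink_per_gpu_tbps = NVLINK_AGGREGATE_TBPS / GPUS_PER_RACK

print(f"Aggregate HBM: {total_hbm_gb:,} GB (~{total_hbm_gb / 1000:.1f} TB)")
print(f"NVLink per GPU: ~{nvlink_per_gpu_tbps:.2f} TB/s")
```

Under that assumption, one rack holds about 20.7 TB of GPU memory and each GPU gets roughly 1.8 TB/s of NVLink bandwidth.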

NVIDIA's H100, H200, and B200 GPUs each serve different AI infrastructure needs—from the proven H100 workhorse to the memory-rich H200 and the groundbreaking B200. We break down real-world performance...

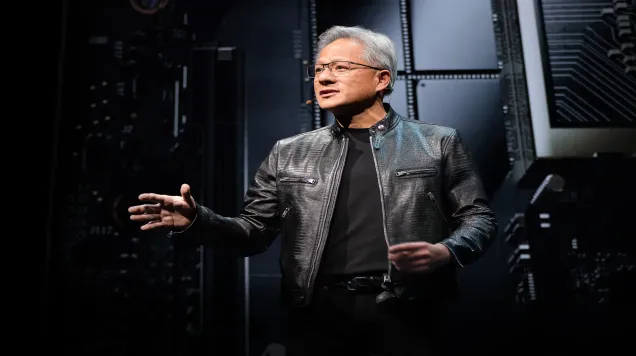

NVIDIA CEO Jensen Huang's Computex 2025 keynote wasn't just another product launch—it was the blueprint for computing's third major revolution. As data centers transform into token-producing 'AI facto...
