Local LLM Hardware Guide 2025: Pricing & Specifications

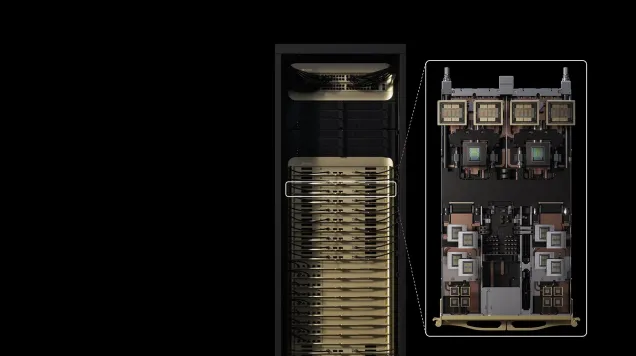

Dual RTX 5090s match H100 performance for 70B models at 25% of the cost. This guide covers hardware pricing for local LLM deployment, from consumer to enterprise GPUs.
